This is the fifth post in a series discussing the advances scientists and engineers have been making in automation and robotics and the possible effects they could have on human society. (See posts of 2Sept16, 12Feb17, 17Jun17 and 8Sept18)

Now just to remind everyone, I’m very much in favour of having robots take over the monotonous drudgery that wastes so much human life. At the same time, however, I’m fully aware that in the short term many people lose their jobs to automation and are left destitute. It’s a shame that our political leaders are so blinded by their own infighting that they seem to be completely unaware of these changes in the society it is their task to govern.

I have three very different items related to robotics to discuss, and I think I’ll start with a little robot that has learned to navigate autonomously the same way that desert ants do. The little six-legged robot, see image below, is called AntBot, and it was developed by a team of scientists and engineers at Aix-Marseille University in France.

In order to find food, ant colonies have certain members called foragers who lay down a chemical trail that not only allows them to find their way home but also enables other colony members to find any food source the forager has located. In the hot, dry Sahara desert, however, that chemical trail is quickly burned away by the Sun, leaving the forager without a way to get home before it too is burned away by the Sun.

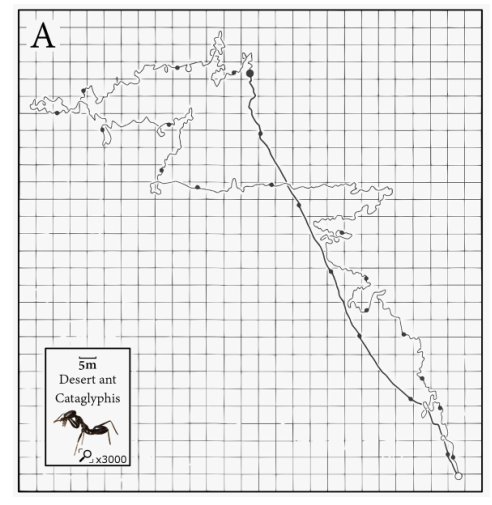

The ant species Cataglyphis fortis has evolved to use the Sun itself to solve that problem. Because of the scattering of sunlight by the atmosphere, the light in the sky becomes more polarized the further away from the Sun you look, reaching a maximum at an angle of 90º. The ant’s eyes allow it to see something we humans cannot: the degree of linear polarization (DoLP) of the light across the sky. This information gives the ant a heading as to where it is going, and by counting its steps the ant knows how far it has gone and therefore where it is in relation to its home. Thanks to that knowledge, the ant is able to zigzag across the ground on its search for food and still make a beeline for home when it needs to, see image below.
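To put a number on how the polarization grows with angle from the Sun, here is a minimal Python sketch. It assumes the textbook single-scattering Rayleigh model, which is an idealization and not something taken from the AntBot team’s work. The function name `rayleigh_dolp` and the `max_dolp` parameter are made up for this illustration; `max_dolp` is there because a real sky never reaches full polarization.

```python
import math


def rayleigh_dolp(angle_from_sun_deg, max_dolp=1.0):
    """Degree of linear polarization under an idealized Rayleigh sky.

    angle_from_sun_deg: angle between the Sun and the patch of sky observed.
    max_dolp: hypothetical scaling factor; 1.0 is the ideal model, and a
              real sky would need a smaller value.
    """
    gamma = math.radians(angle_from_sun_deg)
    # Single-scattering Rayleigh model: sin^2(gamma) / (1 + cos^2(gamma)).
    return max_dolp * math.sin(gamma) ** 2 / (1.0 + math.cos(gamma) ** 2)


if __name__ == "__main__":
    for angle in (0, 30, 60, 90):
        print(f"{angle:3d} degrees from the Sun: DoLP = {rayleigh_dolp(angle):.2f}")
```

In this simple model the polarization is zero when looking straight at the Sun and climbs steadily to its peak at 90º, which matches the behaviour described above.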

AntBot is designed to navigate in the same way. Two UV-sensitive sensors are mounted at its top beneath polarizing material, see image below. This gives AntBot the same heading information as the desert ant, enabling it to replicate the ant’s navigational skill, see second image below.
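The trick the ant and AntBot share, a heading plus a step count, is what sailors call dead reckoning. Here is a minimal Python sketch of the idea. It is not AntBot’s actual software: the function names, the fixed stride length, and the convention that headings are measured counterclockwise from the x axis are all assumptions made for illustration.

```python
import math


def integrate_path(legs, stride_m):
    """Add up a zigzag outbound trip into a single position.

    legs: list of (heading_deg, steps) pairs, one per straight segment.
    stride_m: assumed constant distance covered per step, in metres.
    Returns the (x, y) position relative to the nest at (0, 0).
    """
    x = y = 0.0
    for heading_deg, steps in legs:
        distance = steps * stride_m
        heading = math.radians(heading_deg)
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
    return x, y


def home_vector(position):
    """Return (heading_deg, distance_m) of the straight line back to the nest."""
    x, y = position
    distance = math.hypot(x, y)
    # The way home points from the current position back to the origin.
    heading_deg = math.degrees(math.atan2(-y, -x)) % 360.0
    return heading_deg, distance


if __name__ == "__main__":
    trip = [(0, 100), (90, 100)]  # 100 steps along x, then 100 steps along y
    heading, distance = home_vector(integrate_path(trip, stride_m=0.01))
    print(f"Head {heading:.0f} degrees for {distance:.2f} m to get home")
```

With the example trip, the walker ends up one metre out along each axis, so the beeline home is at 225º and about 1.41 m long. A real walker would also pile up error with every step, which this sketch ignores.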

The researchers at Aix-Marseille University hope to use their navigational system both as a backup for GPS and as a navigational system for other autonomous robots. It’s another example of how robots are gaining an ever greater sensory idea of the world around them.

Over the last decade or so scientists and engineers have succeeded in developing a series of software techniques that enable computers to actually learn from experience, or from data supplied to them. These machine learning techniques, a major branch of Artificial Intelligence or AI, employ a trial and error approach, with the accuracy of the computer’s guesses improving each time it learns what not to do.

Despite these advances, however, computers still lack one aspect of intelligence that only the most advanced of animals possess: self-awareness. That is, an ability to understand themselves, to imagine themselves doing something before they actually do it in order to compare the actual results to those they had imagined, which is the beginning of experimentation.

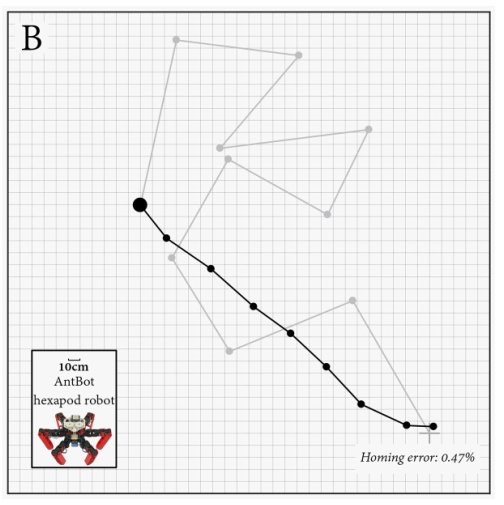

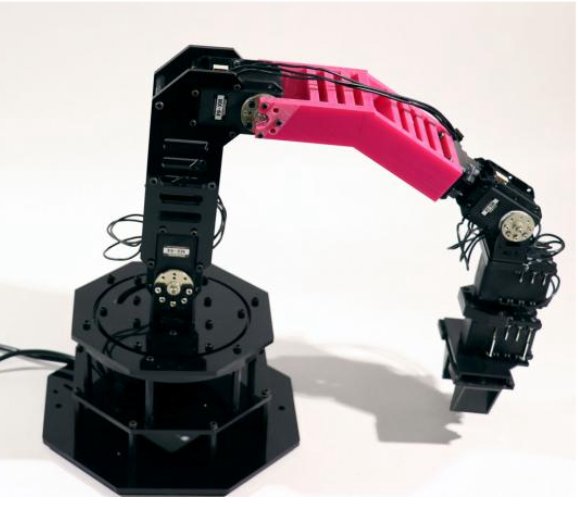

Now researchers at the Creative Machines Lab at Columbia University’s Department of Engineering have taken the first steps in robotic self-awareness. Led by Professor Hod Lipson, the team used a mechanical arm that possessed four degrees of freedom, four joints if you will, see image below. Starting with absolutely no prior knowledge of what the arm can do, the computer controlling the arm uses the AI tool known as Deep Learning, along with a lot of trial and error, to determine what its arm is capable of, see image below. In other words, the robot is learning about itself!

At first the arm’s movements were random and aimless, “babbling” as described by Professor Lipson. After some 35 hours of playing, however, the robot had developed an accurate enough model of itself that it could carry out simple ‘pick and place’ tasks with 100% success.

The researchers went on to experiment with the robot’s ability to detect ‘damage’ to itself. What they did was to replace a part of the mechanical arm with a ‘deformed’ part, see image below. The robot quickly realized that its movements no longer matched up with its model and soon adjusted to a new self-model.
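The babble, model, notice damage, re-model loop can be sketched in a few lines of Python. To be clear, this is a toy and not the Columbia team’s method: they used Deep Learning on a real four-joint arm, while this sketch assumes a simulated two-joint planar arm whose only unknowns are its two link lengths, fitted by ordinary least squares. Every name and number here is hypothetical.

```python
import math
import random


def forward(lengths, angles):
    """Hand position of a two-link planar arm (the toy 'body')."""
    l1, l2 = lengths
    t1, t2 = angles
    x = l1 * math.cos(t1) + l2 * math.cos(t1 + t2)
    y = l1 * math.sin(t1) + l2 * math.sin(t1 + t2)
    return x, y


def babble(true_lengths, n, rng):
    """Try n random poses and record where the hand really ended up."""
    samples = []
    for _ in range(n):
        angles = (rng.uniform(-math.pi, math.pi), rng.uniform(-math.pi, math.pi))
        samples.append((angles, forward(true_lengths, angles)))
    return samples


def fit_self_model(samples):
    """Least-squares estimate of the two link lengths from babbling data."""
    a11 = a12 = a22 = b1 = b2 = 0.0
    for (t1, t2), (x, y) in samples:
        # Each observation gives two equations that are linear in l1 and l2.
        rows = ((math.cos(t1), math.cos(t1 + t2), x),
                (math.sin(t1), math.sin(t1 + t2), y))
        for c1, c2, observed in rows:
            a11 += c1 * c1
            a12 += c1 * c2
            a22 += c2 * c2
            b1 += c1 * observed
            b2 += c2 * observed
    det = a11 * a22 - a12 * a12  # solve the 2x2 normal equations directly
    return (b1 * a22 - b2 * a12) / det, (a11 * b2 - a12 * b1) / det


def mean_error(model, samples):
    """Average gap between where the model predicts the hand and where it was."""
    total = 0.0
    for angles, (x, y) in samples:
        px, py = forward(model, angles)
        total += math.hypot(px - x, py - y)
    return total / len(samples)


if __name__ == "__main__":
    rng = random.Random(0)
    model = fit_self_model(babble((1.0, 0.8), 50, rng))   # healthy arm
    after_damage = babble((1.0, 0.5), 20, rng)            # second link 'deformed'
    if mean_error(model, after_damage) > 0.05:            # hypothetical threshold
        model = fit_self_model(after_damage)              # build a new self-model
    print("Self-model after damage:", model)
```

The point of the toy is the loop itself: the self-model is only ever compared against what the body actually does, so a mismatch is both the damage alarm and the data for the repair.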

Speaking of the study, Lipson remarked: “This is perhaps what a newborn child does in its crib, as it learns what it is… While our robot’s ability to imagine itself is still crude compared to humans, we believe that this ability is on the path to machine self-awareness.” Will the combination of AI and self-aware technology result in the creation of a true robot straight out of science fiction? We may learn the answer to that question sooner than we think!

So if robots and computers do become self-aware, could they ever develop emotions like those humans have? Well, a recent news item has actually convinced me that some day they will, and that while their emotions may be similar to ours they will undoubtedly differ in many details.

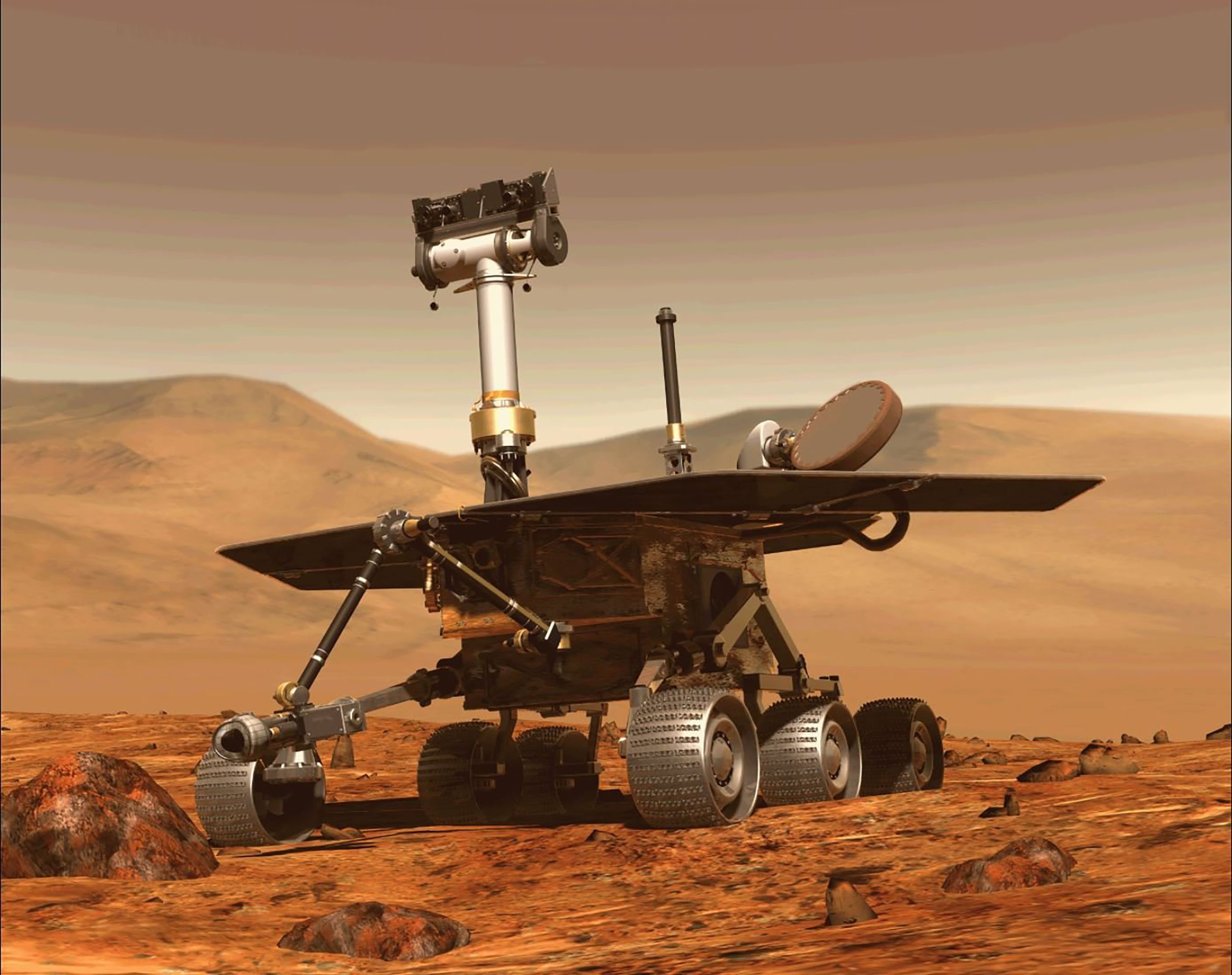

You may have heard that NASA’s Mars rover named Opportunity has officially been declared lost as of 13 February 2019. The space agency’s last contact with Opportunity was actually back on 10 June 2018 when a large dust storm engulfed the rover’s location blocking the sunlight from its solar arrays. NASA’s greatest fear was that the dust might coat the surface of Opportunity’s solar arrays, leaving the rover permanently without power.

After eight months and over a thousand attempts to reestablish contact with the rover, it appears that those worst fears have come to pass and Opportunity is now lost. Even as it declared the mission over, NASA released the final message sent back by Opportunity as it hunkered down to try to survive the storm.

“My batteries are low and it’s getting dark!”

OK, sure, Opportunity didn’t actually send those words. In fact the last message from the rover was just data, just numbers that indicated its status: that its battery power was low and the level of ambient light was dropping rapidly. The solitary little robot had no clear idea of the danger it was facing; it’s just a robot after all.

Still, you have to admit, it’s hard to say those words without feeling just a trace of fear. And as we learn how to give our creations the sensors that can detect situations that are dangerous, as they become more aware of the consequences of those situations to them… well, couldn’t that actually be called fear?