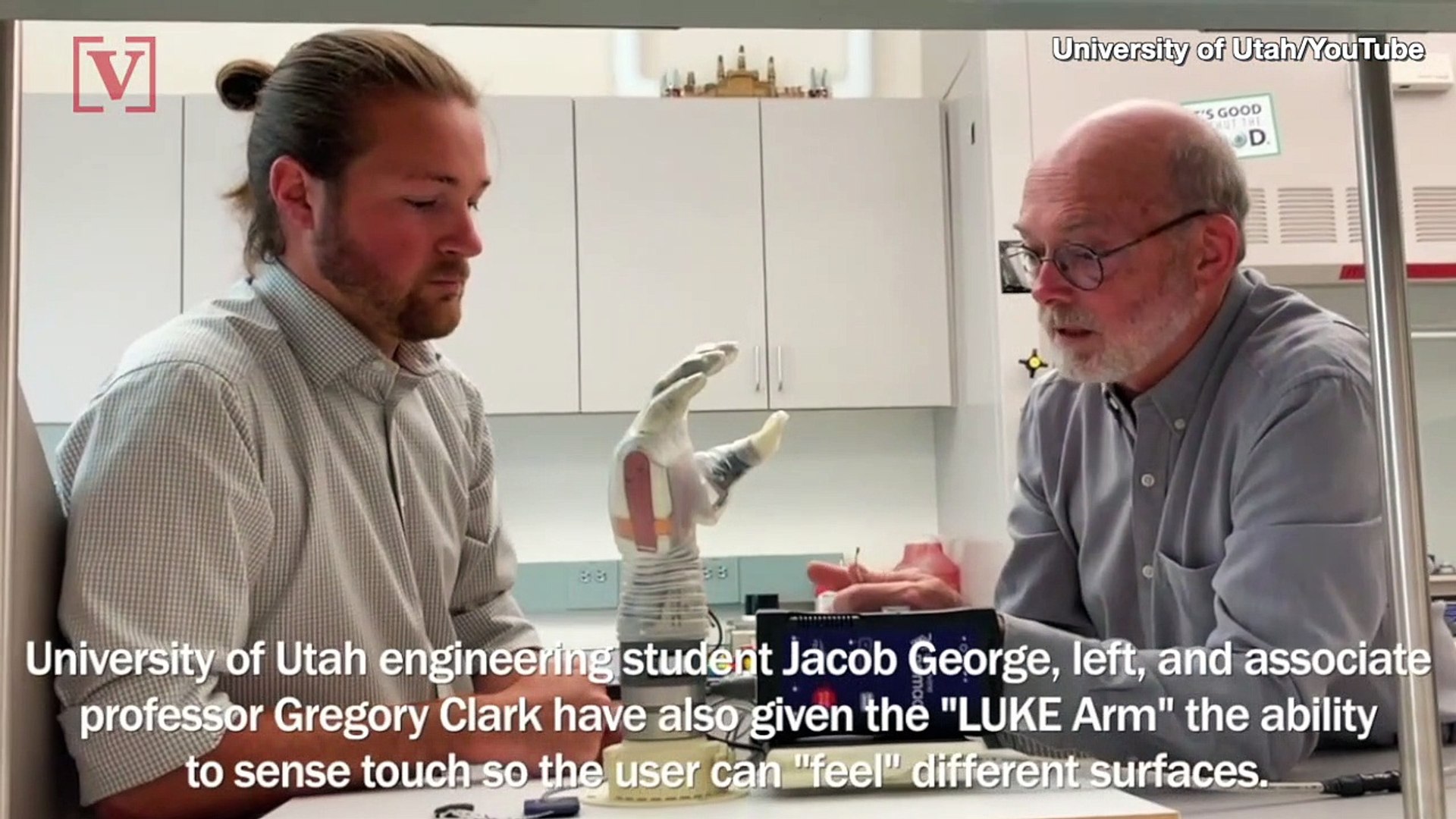

The development of prosthetic limbs has advanced so quickly in the last few years that our technology really seems to be catching up with the science fiction ‘bionic’ limbs in movies and TV shows from 40 or 50 years ago. In fact, a new prosthetic arm being developed at the University of Utah is so sophisticated that the researchers have named it the ‘Luke Arm’ after the ‘Star Wars’ character Luke Skywalker, who famously lost his hand to Darth Vader and had it replaced with an artificial one.

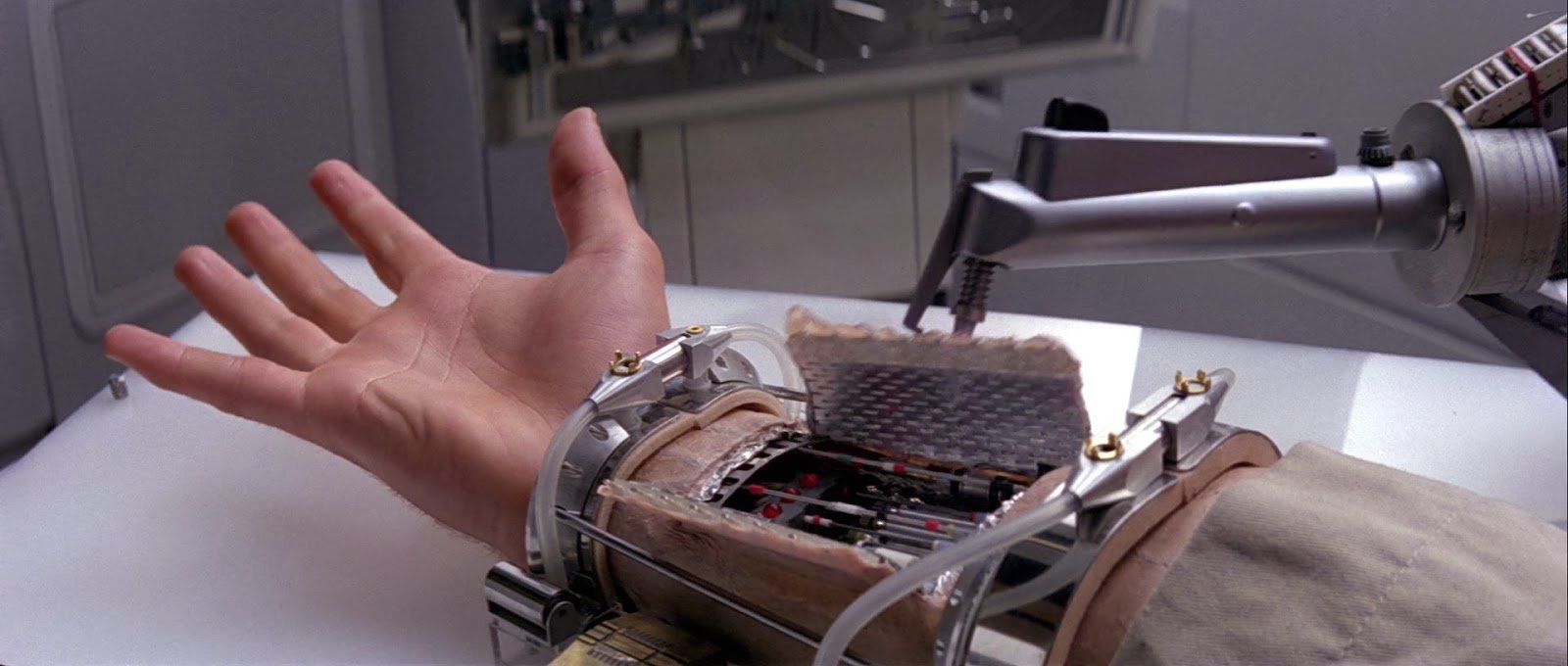

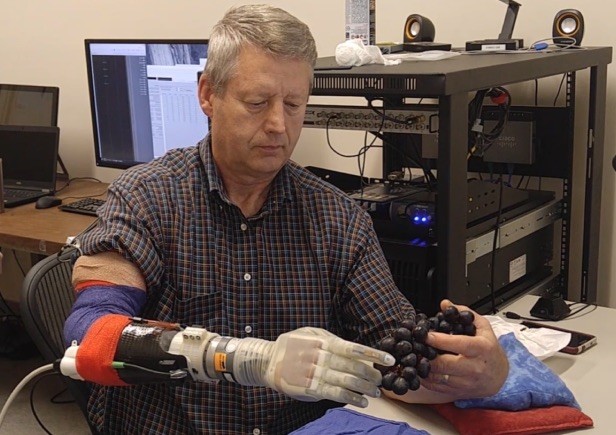

The prosthetic currently undergoing testing is designed for people whose left arm has been amputated below the elbow, but the developers are confident that the design can easily be adapted for the right arm and for amputations above the elbow. Mechanically, the arm is built from metal motors covered by a clear silicone skin in which one hundred electronic sensors are embedded.

The prosthetic is so sophisticated that it can not only move and grab in response to signals from the wearer’s brain but also send sensory signals back to the brain, where they are interpreted as the feelings of touch, heat and even pain. This is accomplished by a system of microelectrodes and wires implanted in the wearer’s forearm, which connects the nerve endings of the lost limb to the arm’s one hundred sensors.

The system of microelectrodes has been named the Utah Slanted Electrode Array and was invented by Professor Emeritus Richard A. Normann. The array is connected to an external computer that serves as a translator between the biological and electronic signals.

Patients using the ‘Luke Arm’ have succeeded in performing even delicate tasks such as removing a single grape from a bunch or picking up an egg. This is possible because the sensors in the arm let the user actually feel how soft or hard an object is. One test subject, Keven Walgomott of West Valley City, Utah, even said that he could feel the softness of his wife’s hand as he held it with the ‘Luke Arm’.

According to Jacob George, the study’s lead author and a doctoral candidate at the University of Utah, “We changed the way we are sending that information to the brain so that it matches the human body…We’re making more biologically realistic signals.”

The researchers are currently working on a portable version of the ‘Luke Arm’ that will allow in-home trials to begin. Testing could take another few years before FDA approval is granted and the prosthetic becomes commercially available. Nevertheless, the day is coming when artificial limbs will give amputees a quality of life nearly equal to that provided by the natural limbs they have lost.

If you’d like to see the ‘Luke Arm’ in action, follow the link below to a YouTube video from the University of Utah. https://www.youtube.com/watch?v=_Xl6rFvuR08

In the story above I mentioned that the ‘Luke Arm’ has one hundred sensors embedded in its skin. That may sound like a lot, but a real arm has thousands of nerve endings, giving our brain a much more complete impression of everything that is happening to that limb. Could an electronic skin like the one on the ‘Luke Arm’ ever be developed with as many sensors as natural skin?

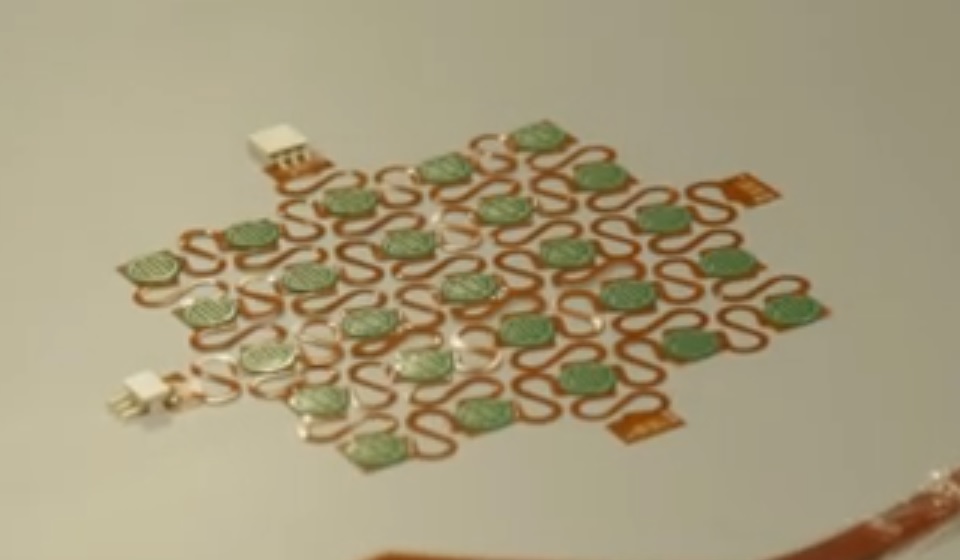

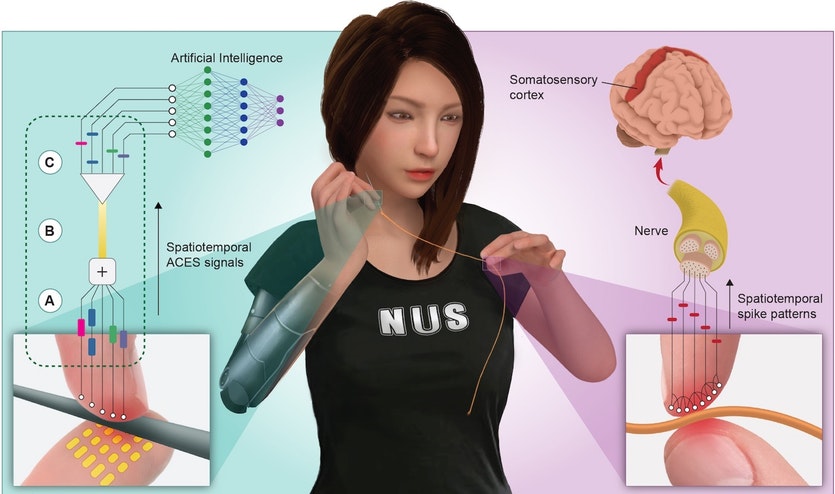

They’re already working on it! Scientists in the Department of Materials Science and Engineering at the National University of Singapore have developed a sampling architecture for sensor arrays that they call the ‘Asynchronously Coded Electronic Skin’ (ACES). The engineers say that ACES could work with arrays of up to 10,000 sensors, and they have already fabricated and tested a 250-sensor array to demonstrate their technique.

Now, there are several problems you’ll need to overcome if you intend to greatly increase the number of sensors in your system. The first is simply data overload, that is, more data than a computer, or even our brain, can handle. The second is sampling speed. With thousands of sensors waiting to have their data read, even at a high sampling rate a large fraction of a second or more can pass between successive readings of any particular sensor. That means that an emergency signal, such as burning heat or a stabbing wound, could go unnoticed until real damage is done.
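To put rough numbers on the sampling-speed problem, here is a small Python calculation. It assumes, purely for illustration, a single readout channel that reads one sensor at a time in a fixed round-robin order at a hypothetical rate of 10,000 reads per second. Real systems differ, and the function name `revisit_interval_s` is our own invention.

```python
def revisit_interval_s(num_sensors: int, reads_per_second: float) -> float:
    """Seconds between two consecutive reads of the SAME sensor when one
    readout channel polls every sensor in turn (round-robin).

    Assumption: a single shared channel and a fixed read rate; this is an
    illustration, not a model of any specific electronic skin.
    """
    if num_sensors <= 0 or reads_per_second <= 0:
        raise ValueError("num_sensors and reads_per_second must be positive")
    # Every other sensor must be read before this one comes around again.
    return num_sensors / reads_per_second


# Hypothetical aggregate readout rate (an assumption, not a figure from the
# ACES researchers).
READS_PER_SECOND = 10_000

for n in (100, 250, 10_000):
    delay_ms = revisit_interval_s(n, READS_PER_SECOND) * 1000
    print(f"{n:>6} sensors -> {delay_ms:.0f} ms between reads of one sensor")
```

Under those assumptions, a 100-sensor skin revisits each sensor every 10 ms and a 250-sensor array every 25 ms, but a 10,000-sensor skin gets back to any given sensor only once per second. A delay of that length is enough for a sudden burn to go unnoticed.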

The technique used by the engineers in Singapore is actually modeled on the way the nerves in our body communicate with the brain: an ‘event-based’ sampling protocol. Think about it: when you first sit down in a chair you feel a large area of pressure as your skin makes contact, but after a second or so you hardly feel the chair at all. This is because our brain reacts only to changes in the messages from our nerves. It pays attention only when our nerves tell it that something, an event, is happening.

The ACES system does much the same thing, passing on data only from sensors that are detecting changes in their environment. In this way it prevents data overload while still allowing important information to reach the controlling intelligence quickly. The researchers in Singapore hope that ACES will prove useful not only for sensors in prosthetic limbs but also for improving the ability of Artificial Intelligence systems to sense and manipulate their environment. In that way ACES may be another step forward in shaping the human-machine interface of the future.
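The event-based idea can be sketched in a few lines of Python using a generic ‘send-on-delta’ rule. Under this rule, a sensor’s reading is passed on only when it has moved more than a set threshold away from the last value that was passed on. This is a simplified illustration of event-based reporting in general, not the encoding the ACES team actually uses. The class name `EventFilter`, the 0.1 threshold, the resting value of 0.0 and the sensor readings are all made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class EventFilter:
    """Generic send-on-delta filter (illustrative, not the ACES scheme).

    A sensor's reading is forwarded only when it differs from the last value
    forwarded for that sensor by more than `threshold`. Sensors are assumed
    to start at `resting_value`, so an untouched skin sends nothing.
    """
    threshold: float
    resting_value: float = 0.0
    last_sent: dict[int, float] = field(default_factory=dict)

    def update(self, readings: dict[int, float]) -> list[tuple[int, float]]:
        """Return the (sensor_id, value) events produced by one frame."""
        events = []
        for sensor_id, value in readings.items():
            previous = self.last_sent.get(sensor_id, self.resting_value)
            if abs(value - previous) > self.threshold:
                # A real change: report it and remember the new baseline.
                self.last_sent[sensor_id] = value
                events.append((sensor_id, value))
        return events


# The 'sitting in a chair' example, with four hypothetical sensors.
skin = EventFilter(threshold=0.1)
frames = [
    {0: 0.0, 1: 0.0, 2: 0.0, 3: 0.0},   # nothing touching the skin
    {0: 0.0, 1: 5.0, 2: 5.0, 3: 0.0},   # sit down: sensors 1 and 2 pressed
    {0: 0.0, 1: 5.0, 2: 5.0, 3: 0.0},   # still sitting: nothing changes
    {0: 0.0, 1: 5.0, 2: 5.05, 3: 0.0},  # tiny fluctuation below threshold
    {0: 0.0, 1: 5.0, 2: 5.05, 3: 9.0},  # sudden stimulus on sensor 3
]
for t, frame in enumerate(frames):
    print(t, skin.update(frame))
```

Running it prints `[]` for the empty, unchanged and barely changed frames. It prints `[(1, 5.0), (2, 5.0)]` when the ‘chair’ is first pressed and `[(3, 9.0)]` for the sudden stimulus. Sitting still therefore costs no bandwidth, while a new event is reported in the very frame it occurs. In real hardware each sensor would make this decision locally rather than in one central loop.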