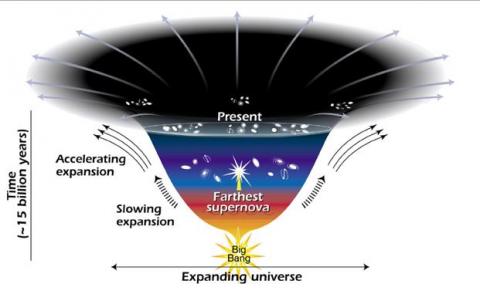

Ask any astronomer or physicist to name the biggest mystery in science today and many will immediately reply, “What is Dark Energy?” You see, our observations tell us that the Universe is expanding, while our theories of gravity tell us that the expansion should be slowing down. Instead, what we see is that the expansion of the Universe is accelerating. Something, some pressure, is pushing the Universe ever farther apart, and that ‘Dark Energy’ actually accounts for roughly 70% of all the energy in the Universe.

The idea that we know so little about roughly 70% of the Universe is more than just a bit embarrassing. Because Dark Energy is the dominant part of the Universe, it will have the dominant effect on the Universe’s eventual fate. To understand why that is so, I’m going to take a step back and review the history of the ‘Big Bang Model’ of the Universe.

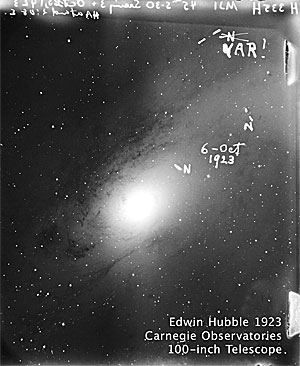

One hundred years ago astronomers thought that the Universe was pretty static, neither expanding nor contracting. Physicists, however, didn’t like that picture, because without something acting to keep the galaxies apart the force of gravity should pull everything together into a ‘Big Crunch’. Everybody was relieved, therefore, when the astronomer Edwin Hubble found that the galaxies were in fact moving away from each other: the Universe was expanding.

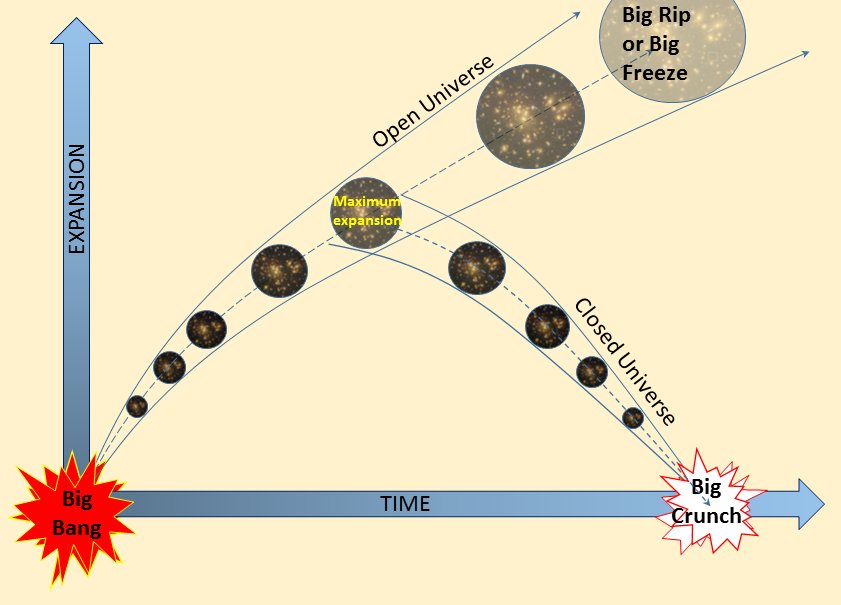

This was the start of the Big Bang Model, in which billions of years ago an incredibly dense, hot Universe expanded rapidly, cooled and then formed the galaxies we see today. The question then became whether gravity was strong enough to eventually bring the expansion to a halt, or whether the Universe had reached ‘escape velocity’ so that the expansion would go on forever. In the first case, once the Universe stopped expanding, gravity would begin to cause it to contract, leading to a Big Crunch. This was known as a closed Universe. The alternative was an open Universe that would fly apart forever.
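The dividing line between those two fates can be put into numbers. The sketch below is only an illustration: it assumes the simplest textbook case of a Universe containing nothing but matter, and it assumes a Hubble constant of 70 km/s/Mpc, a value picked for the example. In that simplified picture a Universe denser than the ‘critical density’ is closed and one less dense is open. The function names are made up for this example.

```python
import math

G = 6.674e-11              # gravitational constant, m^3 kg^-1 s^-2
METERS_PER_MPC = 3.0857e22  # one megaparsec in meters


def critical_density(h0_km_s_mpc):
    """Critical density in kg/m^3 for a given Hubble constant (km/s/Mpc)."""
    h0_si = h0_km_s_mpc * 1000.0 / METERS_PER_MPC  # convert to 1/s
    return 3.0 * h0_si ** 2 / (8.0 * math.pi * G)


def fate_matter_only(density_kg_m3, h0_km_s_mpc):
    """Classify a matter-only Universe (assumption: no Dark Energy at all)."""
    rho_c = critical_density(h0_km_s_mpc)
    if density_kg_m3 > rho_c:
        return "closed"  # gravity wins, expansion eventually reverses
    if density_kg_m3 < rho_c:
        return "open"    # expansion continues forever
    return "flat"        # exactly on the dividing line


if __name__ == "__main__":
    h0 = 70.0  # assumed value, km/s/Mpc
    print(f"critical density: {critical_density(h0):.2e} kg/m^3")
    print(fate_matter_only(2.0e-26, h0))
    print(fate_matter_only(1.0e-27, h0))
```

With the assumed Hubble constant the critical density comes out at a little under 10⁻²⁶ kg per cubic meter, the equivalent of only a few hydrogen atoms per cubic meter. Once Dark Energy enters the picture this simple link between density and fate no longer holds.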

The measurements needed to determine which model was correct were very difficult to make, so difficult in fact that they only became possible in the 1990s, when everyone was shocked to discover that the expansion of the Universe was actually speeding up. Something was pushing it apart, and for lack of a better name that something was called Dark Energy. So we then had the biggest problem in science: what is Dark Energy?

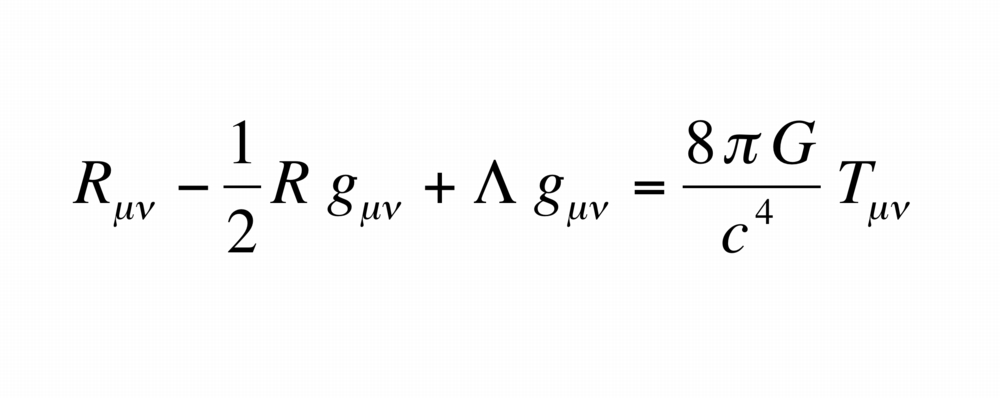

The first thing that scientists would like to learn about Dark Energy is whether it is a constant force or whether its strength changes with time. You see, when Einstein formulated the equation of gravity in his theory of general relativity (see equation below), he realized that mathematically the equation could have a constant added to it. Einstein gave that constant the symbol λ (usually written Λ today), and the effect of that constant would look a lot like the Dark Energy we now see.
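For reference, Einstein’s field equation with the constant included is usually written in the form below. This is the standard modern notation, using the capital Λ, rather than a reproduction of Einstein’s original paper.

$$
R_{\mu\nu} - \frac{1}{2} R \, g_{\mu\nu} + \Lambda \, g_{\mu\nu} = \frac{8 \pi G}{c^{4}} \, T_{\mu\nu}
$$

The left-hand side describes the curvature of space-time and the right-hand side the matter and energy in it. The term Λ g<sub>μν</sub> is the extra constant piece, and a positive Λ acts like a uniform push that drives the expansion to accelerate.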

Now if Dark Energy is just this ‘cosmological constant’ as Einstein pictured it then the expansion of the Universe will continue forever. If the strength of Dark Energy varies however, maybe even reverses itself to an attraction, then the ultimate fate of the Universe is still unknown.

However the measurements needed to determine whether the strength of Dark Energy varies with time are far more difficult to make than the measurements that discovered it in the first place. Still, astronomers have learned quite a bit in the last 25 years and advances in technology have made their instruments vastly more precise and sophisticated. It is with this improved technology that the Dark Energy Spectroscopic Instrument or DESI has been designed and constructed.

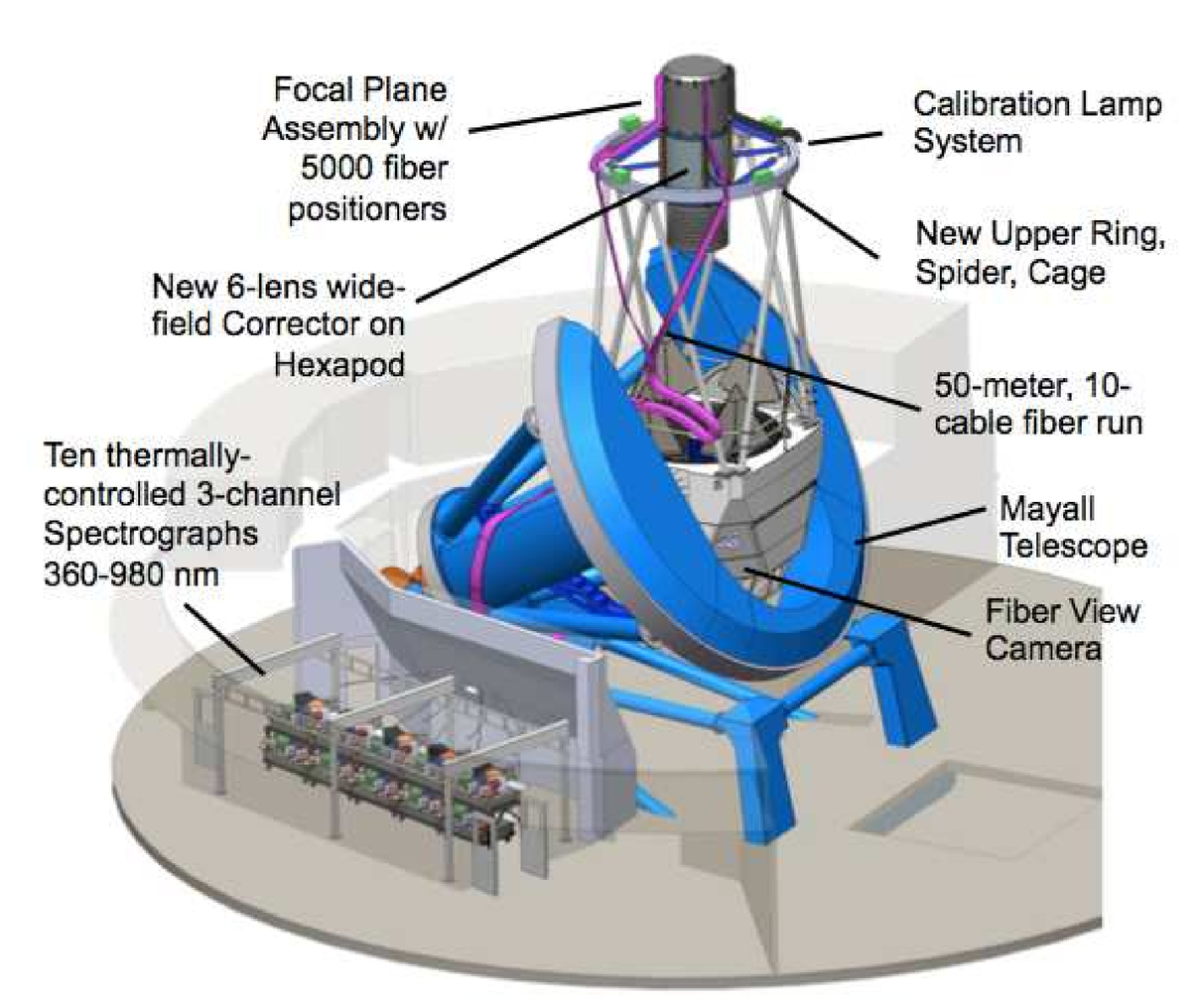

Retrofitted onto the Mayall telescope at Kitt Peak National Observatory outside of Tucson, Arizona, the DESI detector consists of a bundle of 5000 fiber optic cables, each with its own computer-controlled positioning mechanism. The fiber optic cables lead to a bank of ten spectrographs, so that the combined telescope and detector will allow astronomers to accurately measure the position, magnitude and redshift of 5000 galaxies at a time.
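To give a feel for what ‘measuring a redshift’ means, here is a minimal sketch. It compares the wavelength at which a known spectral line is observed with the wavelength that line has in the laboratory. It is not how the DESI software works, since real pipelines fit whole spectra rather than single lines. The function name and the observed wavelength are made up for the example.

```python
H_ALPHA_REST_NM = 656.28  # laboratory wavelength of the hydrogen-alpha line


def redshift(observed_nm, rest_nm):
    """Redshift z from the observed and rest wavelengths of one line."""
    if rest_nm <= 0:
        raise ValueError("rest wavelength must be positive")
    return observed_nm / rest_nm - 1.0


if __name__ == "__main__":
    # Hypothetical galaxy whose H-alpha line shows up at 787.54 nm.
    z = redshift(787.54, H_ALPHA_REST_NM)
    print(f"z = {z:.3f}")
```

A redshift of z means every wavelength in the galaxy’s light has been stretched by a factor of 1 + z on its way to us.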

First light for the DESI instrument came in October of 2019 and the ambitious five-year observation program is now well underway. Once completed, DESI will have obtained the position and redshift of 35 million galaxies, allowing scientists to produce a 3D model of a large section of the Universe. This model will then provide the data needed to answer the question of whether the strength of Dark Energy has varied with time.
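Here is a rough sketch of how a position on the sky plus a redshift becomes a point in a 3D map. It relies on two simplifying assumptions: a Hubble constant of 70 km/s/Mpc, and the simple Hubble law (distance = c × z / H0), which is only a fair approximation for nearby galaxies. A real survey analysis uses a full cosmological model to turn redshift into distance. The function names are invented for this example.

```python
import math

C_KM_S = 299792.458  # speed of light, km/s
H0 = 70.0            # assumed Hubble constant, km/s/Mpc


def hubble_distance_mpc(z, h0=H0):
    """Approximate distance in Mpc from redshift (valid only for small z)."""
    return C_KM_S * z / h0


def to_cartesian(ra_deg, dec_deg, z, h0=H0):
    """Turn sky position (degrees) and redshift into x, y, z in Mpc."""
    d = hubble_distance_mpc(z, h0)
    ra = math.radians(ra_deg)
    dec = math.radians(dec_deg)
    x = d * math.cos(dec) * math.cos(ra)
    y = d * math.cos(dec) * math.sin(ra)
    zc = d * math.sin(dec)
    return (x, y, zc)


if __name__ == "__main__":
    # Two hypothetical galaxies: (right ascension, declination, redshift)
    for ra, dec, z in [(10.0, 20.0, 0.01), (150.0, -5.0, 0.02)]:
        x, y, zc = to_cartesian(ra, dec, z)
        print(f"({x:8.2f}, {y:8.2f}, {zc:8.2f}) Mpc")
```

Repeat that for tens of millions of galaxies and you have the kind of 3D map described above.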

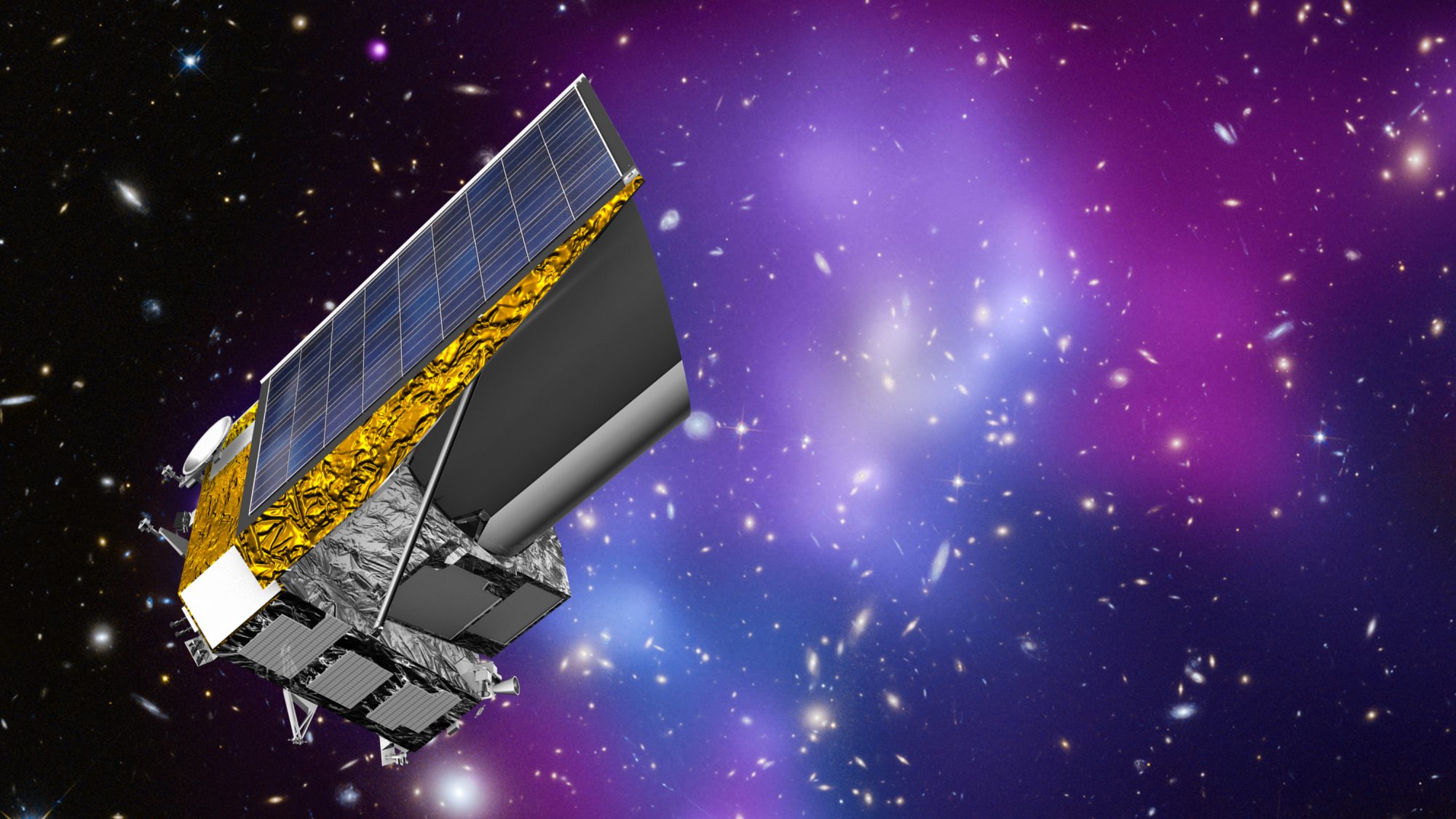

And there are other instruments that will soon be coming online that will complement the observations of DESI. The 4MOST instrument on the European Southern Observatory’s VISTA telescope is similar to DESI, while the Euclid space telescope will also be measuring galaxy redshift versus distance from orbit.

Now it is true that DESI will only tell physicists how Dark Energy changes with time. Nevertheless, that information will be enough to enable them to eliminate many of the competing theories about its nature. So the theorists are anxiously awaiting the results of DESI and its companions, hoping that those results will give them direction in their effort to describe the entire Universe.

We’ve learned a great deal in the last 100 years about the structure and evolution of our Universe. I’ve little doubt that the next 100 years will bring just as many exciting discoveries.