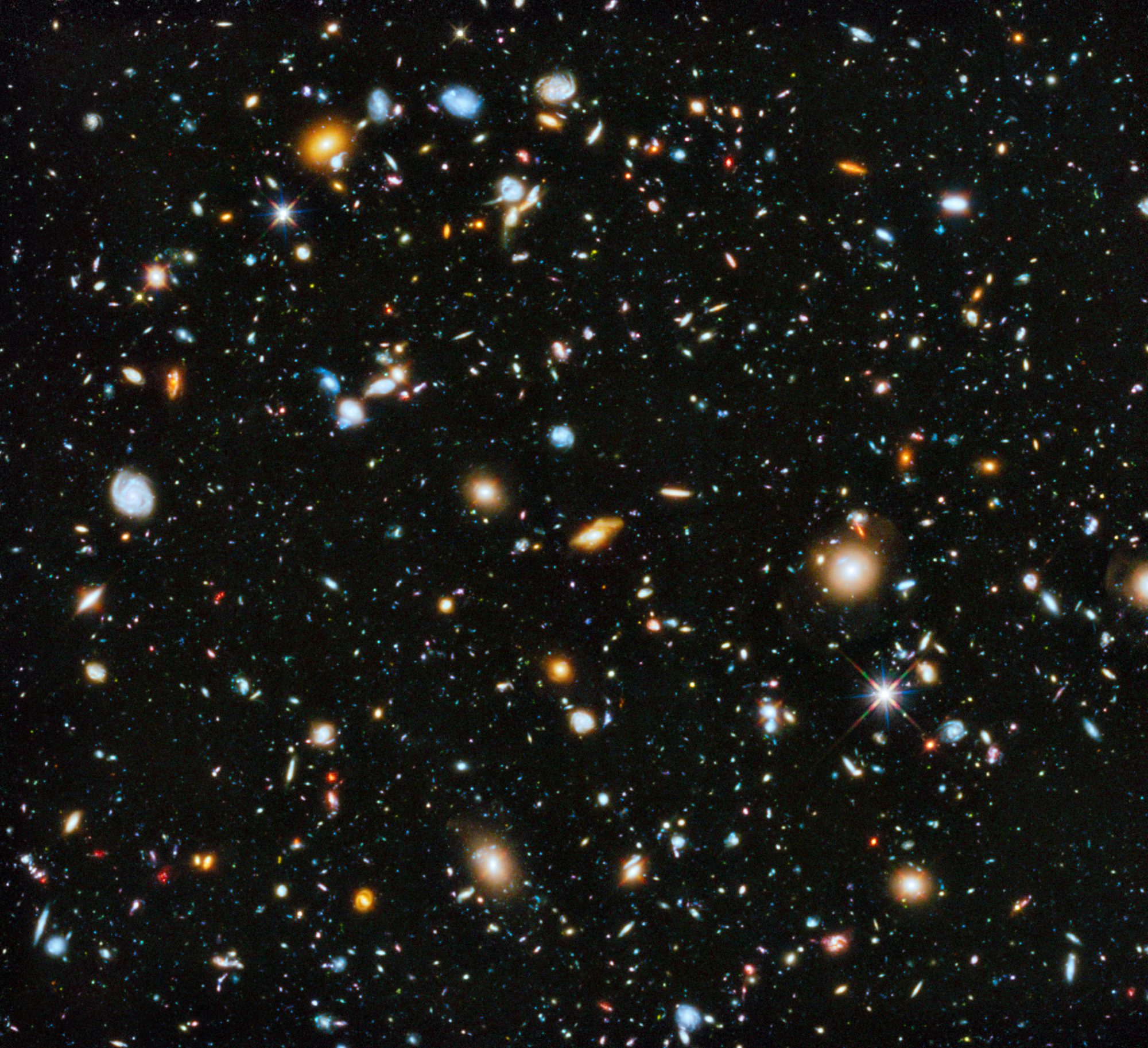

Certainly one of the biggest questions anyone can ask is: is there life out there? Are there other planets that have life, or even intelligent life, living on them? At present we really have no idea; our exploration of the Universe has only just begun. We have landed robotic probes on only a very few celestial bodies, and even on those we have seen so little that some form of life could be hiding from us! Still, as the famed science fiction author Arthur C. Clarke once observed, the question of whether we are alone in the Universe can have only two answers, and either one is awe-inspiring.

Many would say that the Universe is so large, with so many places where life could exist and evolve into intelligence, that surely there must be some life out there. That position, however reasonable, isn’t evidence. So the study of extraterrestrial life remains a science without a subject, a science of conjecture and hypothesis rather than solid fact.

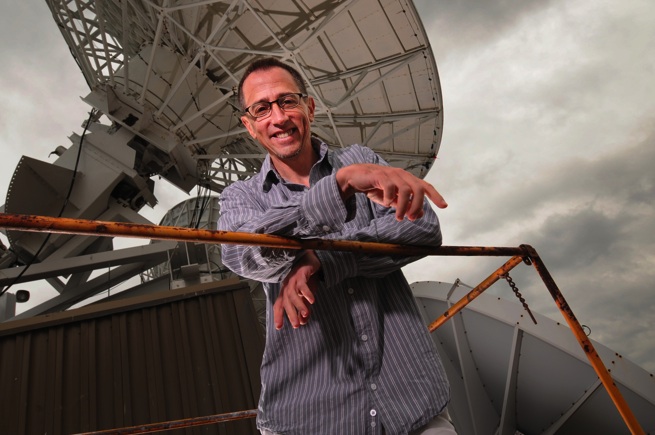

When I was an undergraduate, all of that conjecture was summed up in ‘Drake’s Equation’, named for Frank Drake, the U.S. astronomer who first explicitly wrote down all of the factors in one equation. Using Drake’s equation, it is possible to calculate the number of intelligent species in a galaxy, provided you have accurate numbers for all of the factors in the equation.

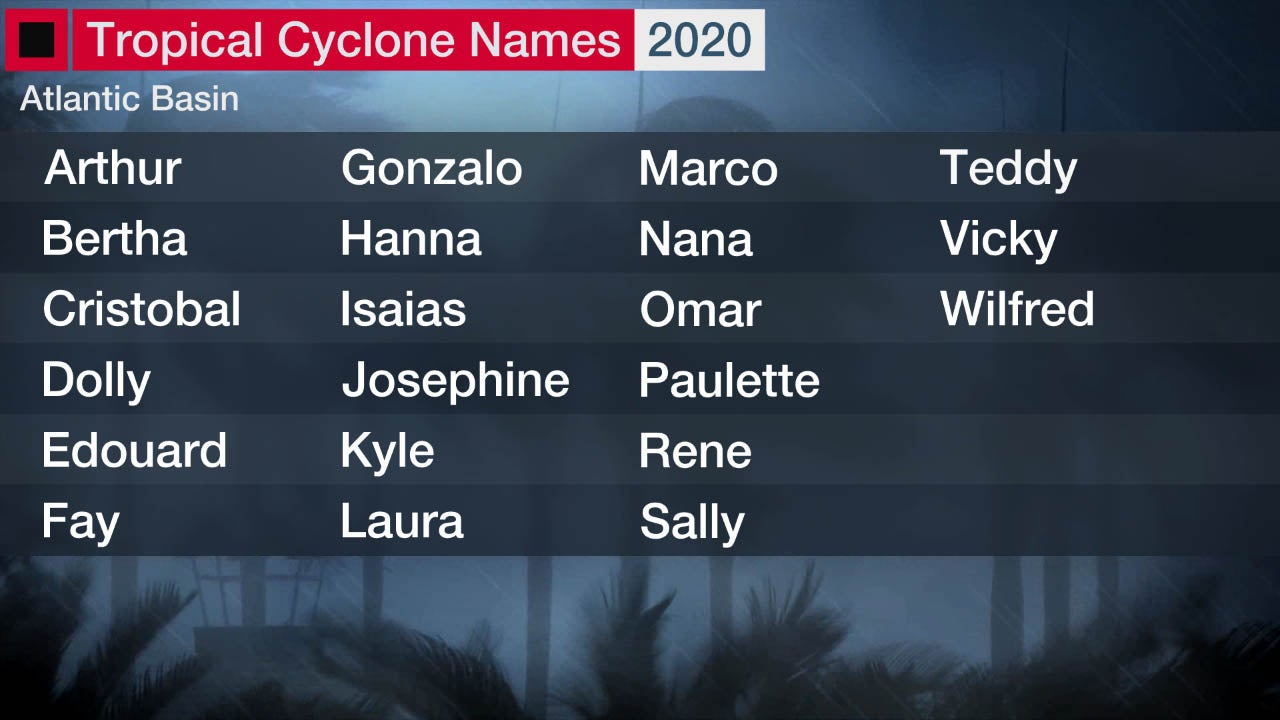

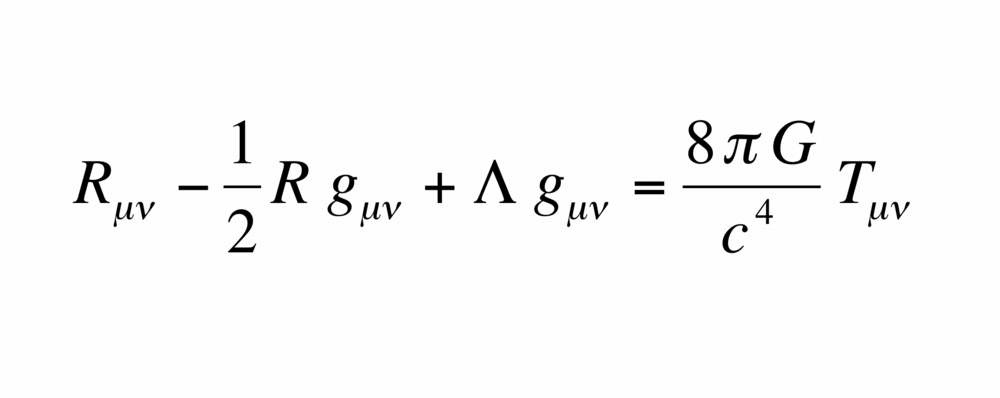

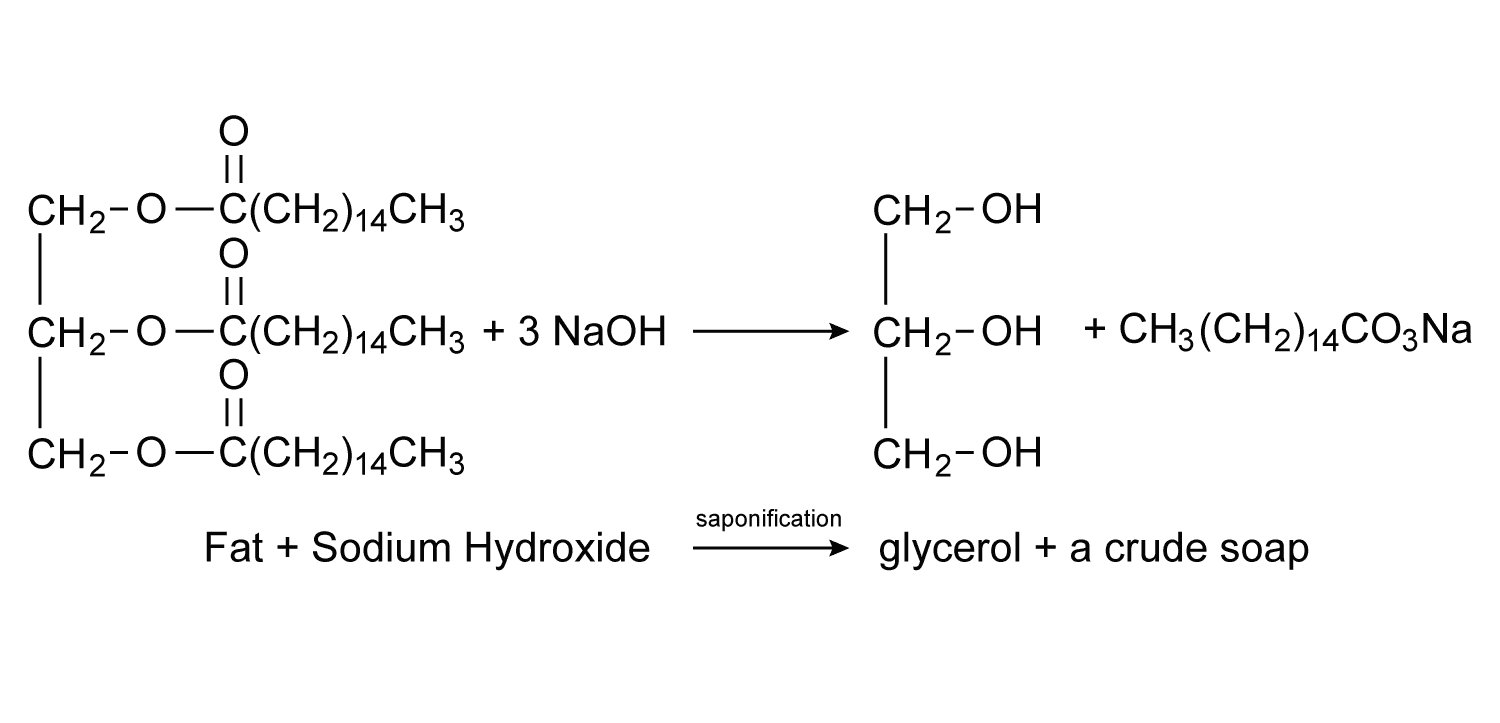

$$I = N \times \mathit{FP} \times \mathit{FH} \times \mathit{FL} \times \mathit{FI}$$

Equation 1

In this equation, I is the number of intelligent species in a galaxy, such as our own Milky Way. You calculate I by multiplying together the factors on the right-hand side.

N is the number of stars in that galaxy, about 200 billion for the Milky Way.

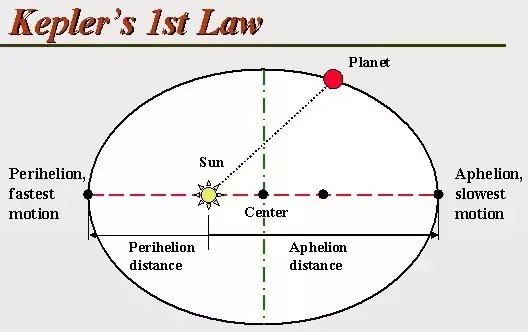

FP is the fraction of those stars that have planets orbiting them. Because it is a fraction, FP must have a value between zero and one.

FH is the fraction of planets that orbit in a ‘habitable zone’ around their star; I’ll explain what that means below. Again, FH is somewhere between zero and one.

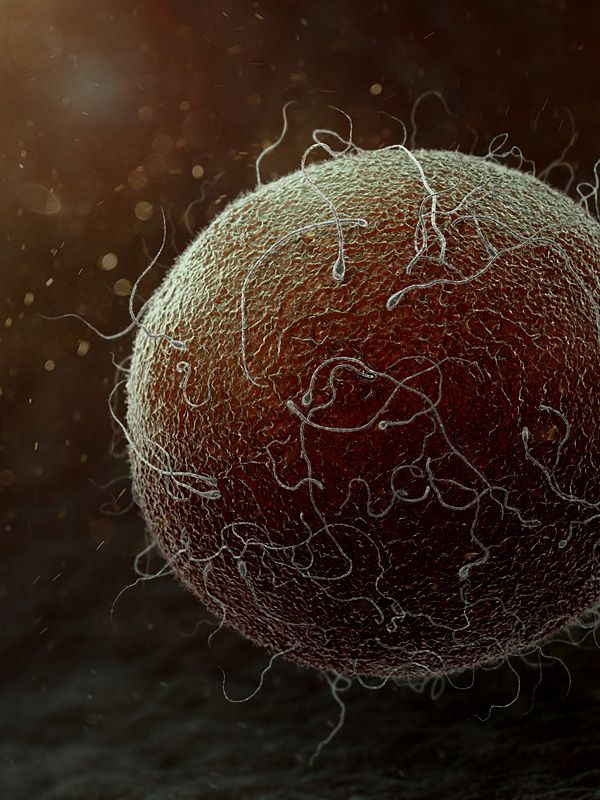

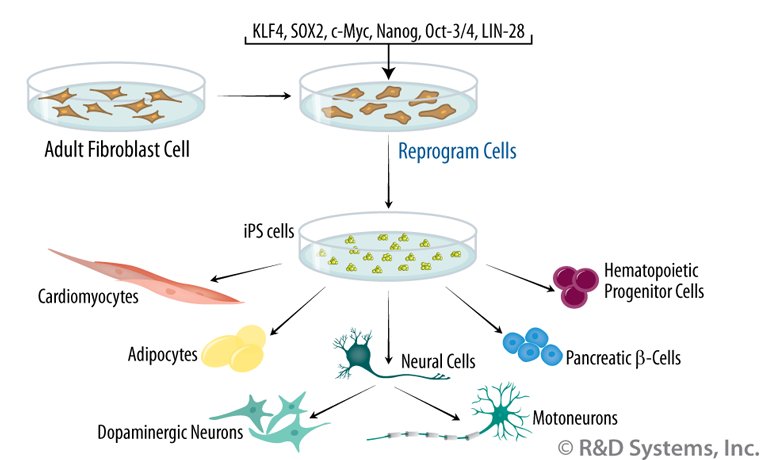

FL is the fraction of habitable planets where life actually arises. Again, zero to one.

FI is the fraction of planets with life on them where intelligence evolves. Zero to one.
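To make the multiplication concrete, here is a minimal Python sketch of the five-factor equation as defined above. Only N (about 200 billion) and the lower bound FP = 0.5 come from this article; the values used for FH, FL and FI are hypothetical placeholders chosen purely for illustration, since those factors are poorly known. The function name `intelligent_species` is likewise just an illustrative name.

```python
def intelligent_species(n_stars: float, fp: float, fh: float, fl: float, fi: float) -> float:
    """Multiply out I = N x FP x FH x FL x FI (the simplified form used in this article)."""
    # Every factor except N is a fraction, so it must lie between zero and one.
    for name, value in (("FP", fp), ("FH", fh), ("FL", fl), ("FI", fi)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return n_stars * fp * fh * fl * fi


N = 200e9  # stars in the Milky Way (figure from the text)
FP = 0.5   # at least half of stars have planets (lower bound from the text)

# FH, FL and FI below are HYPOTHETICAL placeholders, not measurements.
FH, FL = 0.1, 0.5
for FI in (1e-1, 1e-3, 1e-6):
    print(f"FI = {FI:g}: I = {intelligent_species(N, FP, FH, FL, FI):,.0f}")
```

Because the factors simply multiply, every factor-of-1000 drop in FI cuts I by the same factor of 1000; with these placeholders I ranges from hundreds of millions down to a few thousand. The answer is only as good as the least-known factor.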

Back when I was in college, the only factor on the right-hand side of Drake’s equation that astronomers had measured with any accuracy was N, the number of stars in the Milky Way. Every other factor was completely unknown, so any attempt to actually use the Drake equation was pure guesswork.

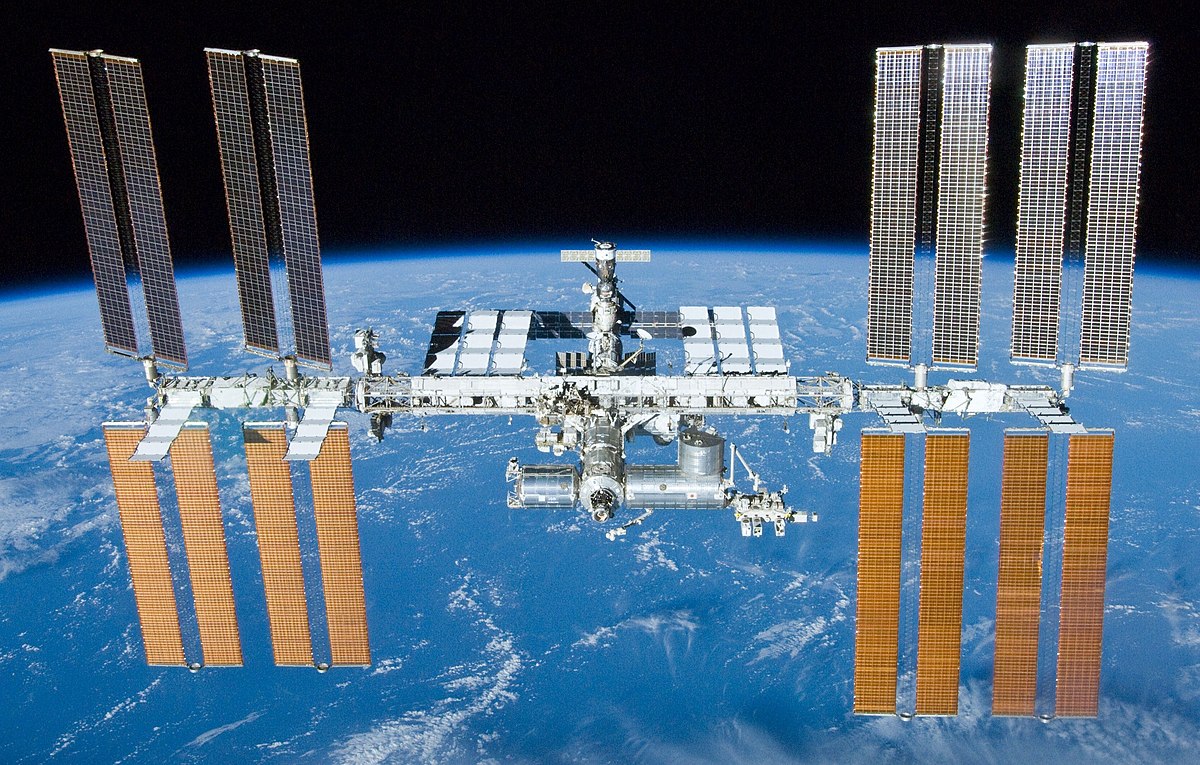

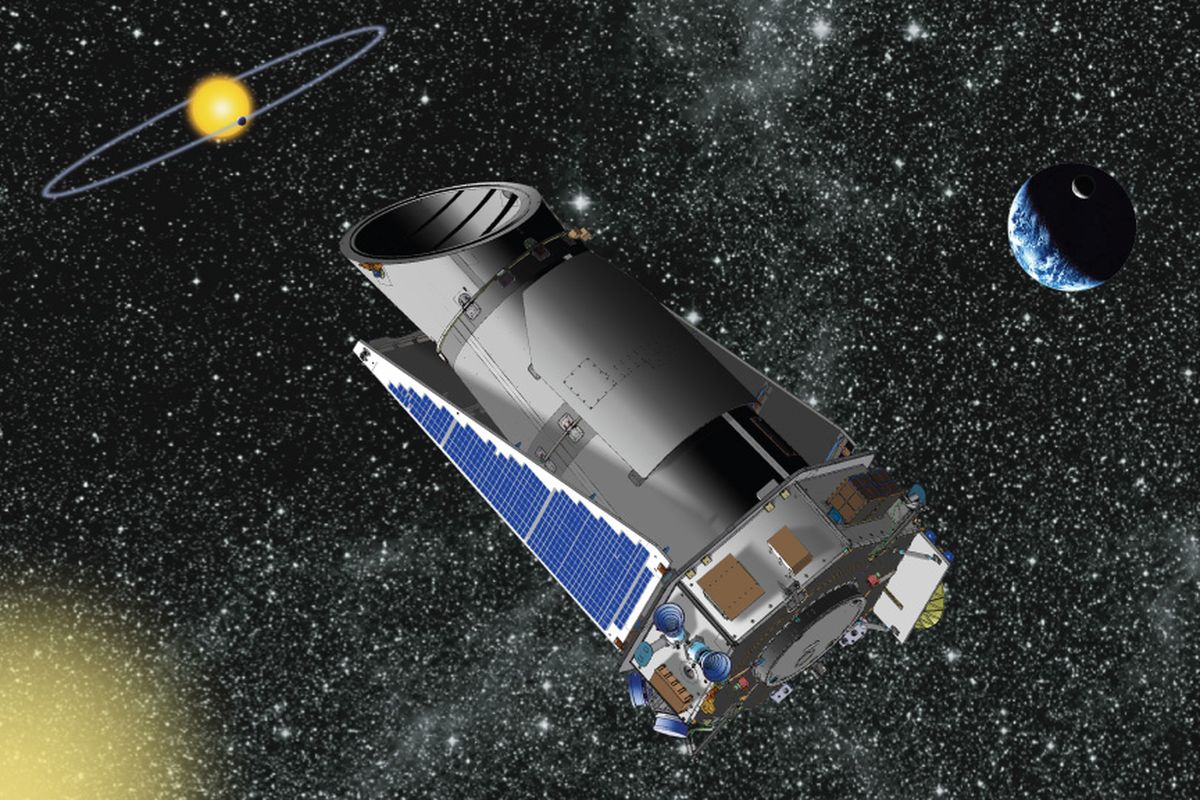

We’ve made some progress since then. In particular, thanks to the discoveries made by the Kepler space telescope and other astronomical programs, we now know of thousands of planets outside our solar system. Because of these discoveries we can now say with reasonable confidence that at least half of all stars have planets orbiting them, perhaps 90% or even more. So if even half of the Milky Way’s 200 billion stars have planets, that is at least 100 billion planetary systems in our galaxy alone: an awful lot of planets.

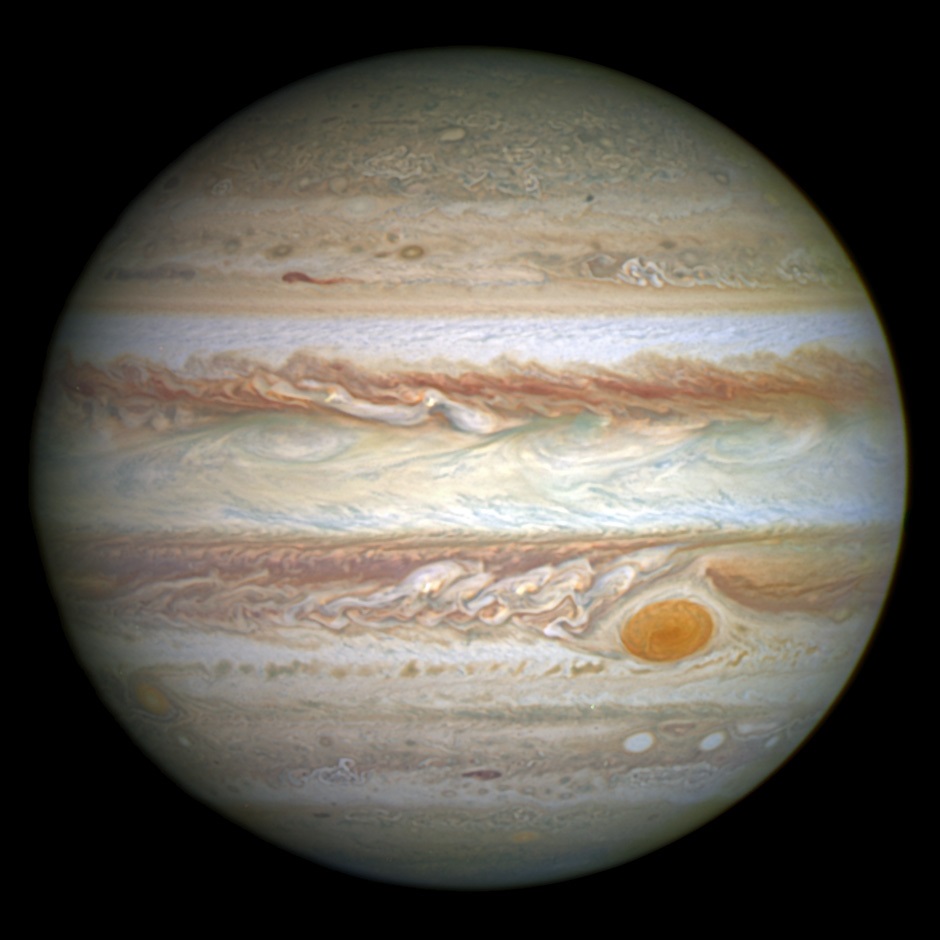

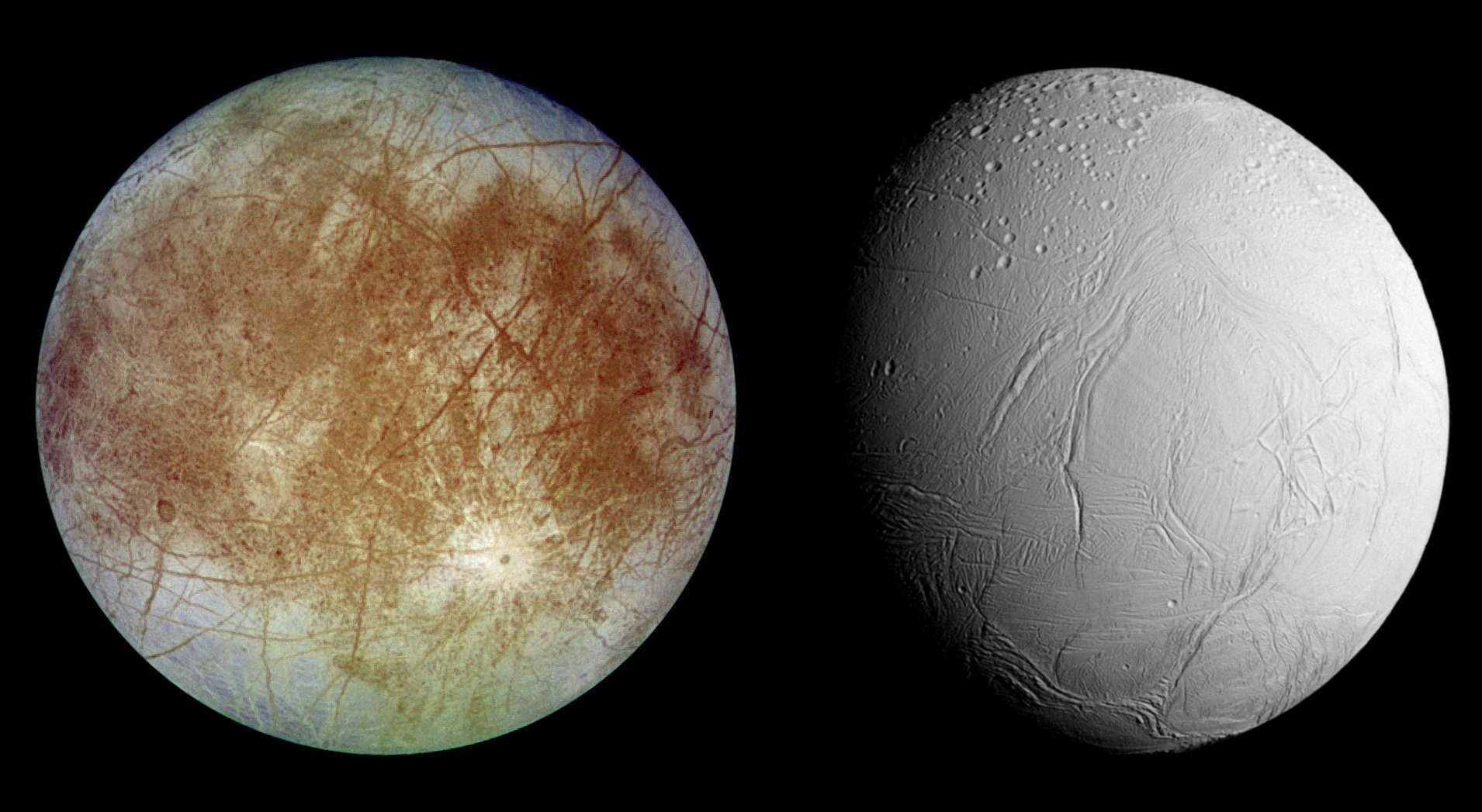

We’ve also made some progress with FH, the fraction of planets that could be habitable for life. Thirty to forty years ago, ‘habitable’ would have meant liquid water on a planet’s surface, which in our solar system meant only Earth, one out of eight planets. However, our space probes have since discovered that Mars once had liquid water on its surface, possibly even oceans, and may still have water beneath its surface. Data from probes to the outer planets have also raised the possibility that Europa and Enceladus, moons of Jupiter and Saturn respectively, have large oceans of liquid water beneath their icy surfaces. That means our solar system might have four or more potentially habitable bodies, not just Earth. So it appears that FH may be larger than we thought just a few decades ago.

That leaves us with just the last two factors: FL, the fraction of planets with a habitable environment where life arises, and FI, the fraction of planets with life where intelligence evolves. The only way to measure these two numbers accurately would be to closely study a few hundred or more habitable planets or moons and simply count how many have developed life and how many of those have gone on to evolve intelligence.

We can’t do that, however; it will probably take decades for our space technology even to find life on Mars or Europa, if it’s there. The only real example we have to study is Earth. Can we learn anything about FL and FI from studying the history of life here?

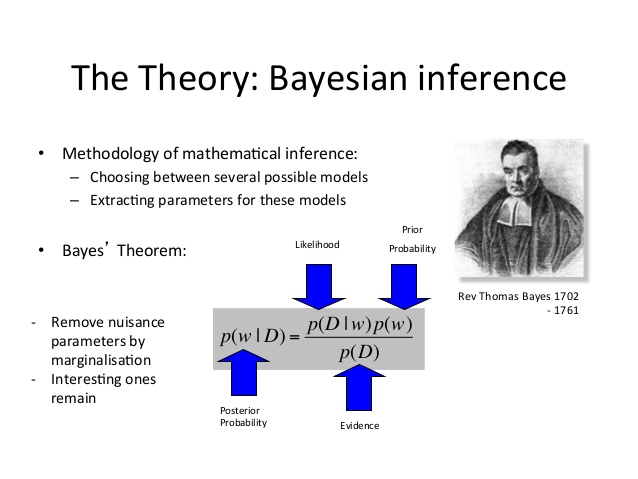

A new study says that we can. Authored by David Kipping of Columbia University’s Department of Astronomy, “An objective Bayesian analysis of life’s early start and our late arrival” uses probability mathematics to calculate the values of FL and FI that best reproduce the history of life here on Earth.

You see, we know that our planet is about 4.5 billion years old, and there is growing evidence that life was well established here as far back as 4 billion years ago. Indeed, it looks as though life began on Earth as soon as its surface had cooled enough for life to exist. On the other hand, complex multicellular life took about 4 billion years to evolve, and even then intelligence took roughly another half billion years.

So what Dr. Kipping did was develop a computer program that varied FL and FI across all of their possible values to see which values succeeded in reproducing the history of life here on Earth. His result is that while life itself could be quite common in the Universe, intelligence is very rare. Mathematically, he found that FL is close to one but FI is very, very close to zero: thousands of planets may have life on them for every one that possesses an intelligent species.
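The idea of trying every possible value and keeping the ones that best reproduce Earth’s history can be illustrated with a toy grid calculation. This is not Dr. Kipping’s actual model, only a sketch under loudly stated assumptions: treat the appearance of life (or of intelligence) as a random event occurring at an unknown rate λ per billion years, give every order of magnitude of λ from 0.001 to 1000 equal prior weight, and score each λ by the exponential waiting-time likelihood λ·exp(-λt). The waiting times used (about 0.5 billion years from Earth’s formation to life, about 4 billion years from life to intelligence) are rough figures read off the timeline above, and the sketch ignores complications such as observer selection effects that a real analysis has to handle. The function `posterior_fast_rate` and its parameters are hypothetical names.

```python
import math


def posterior_fast_rate(wait_gyr, lo=-3.0, hi=3.0, steps=6000, threshold=1.0):
    """Toy grid posterior: P(rate > threshold | first event after wait_gyr).

    HYPOTHETICAL assumptions, for illustration only:
    - the event is a Poisson process with unknown rate lam (events per Gyr);
    - the prior on lam is uniform in log10(lam) between 10**lo and 10**hi;
    - the likelihood of a first event at time t is lam * exp(-lam * t).
    """
    total = 0.0
    fast = 0.0
    for i in range(steps + 1):
        # Grid points are evenly spaced in log10(lam).
        lam = 10 ** (lo + (hi - lo) * i / steps)
        # A uniform prior in log space gives every grid point equal weight,
        # so the unnormalized posterior weight is just the likelihood.
        weight = lam * math.exp(-lam * wait_gyr)
        total += weight
        if lam > threshold:
            fast += weight
    return fast / total


# Rough waiting times in billions of years, taken from the timeline above.
p_life = posterior_fast_rate(0.5)   # life appeared soon after Earth cooled
p_intel = posterior_fast_rate(4.0)  # intelligence appeared much later
print(f"P(fast rate | life after ~0.5 Gyr):         {p_life:.3f}")
print(f"P(fast rate | intelligence after ~4 Gyr):  {p_intel:.3f}")
```

In this toy, a short wait leaves roughly 60% of the posterior on rates faster than once per billion years, while a long wait leaves only about 2%. That echoes, very crudely, the contrast between life’s early start and our late arrival; the actual study’s model and conclusions should be taken from the paper itself.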

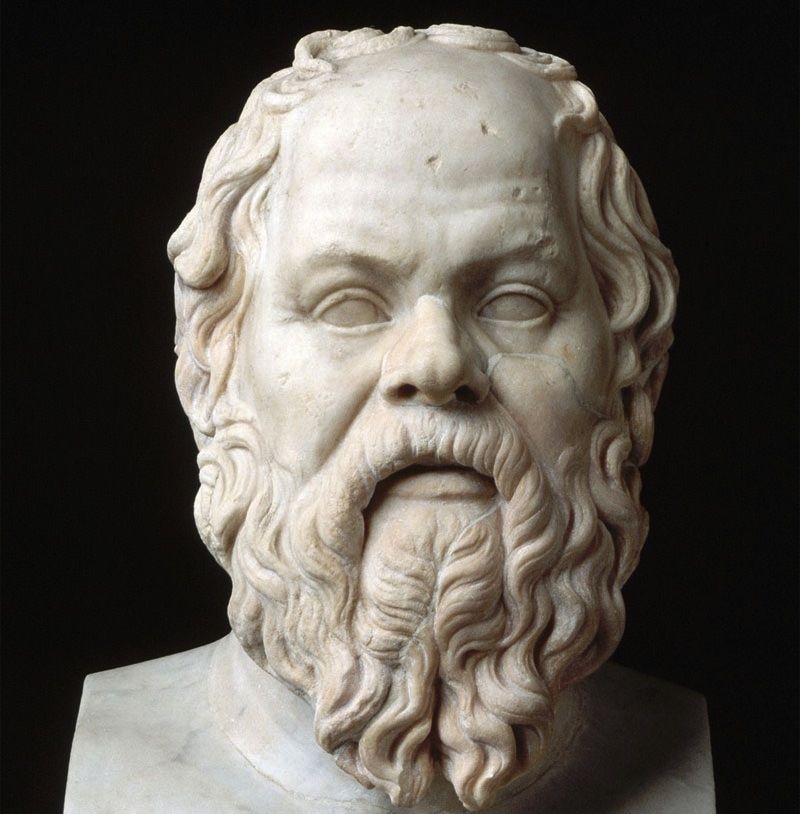

I have to admit that I agree with Dr. Kipping. The more we learn about life at the biochemical level, the more it seems to be something that will inevitably happen at least once on any planet where it can happen, and once it happens it spreads everywhere on that planet. Intelligence, however, is so complex and so dependent on the twists and turns of evolution that intellect, mind itself, may be the rarest thing in the Universe.

Maybe we should take a lesson from Dr. Kipping’s work. If intelligence is the rarest, most valuable thing in the Universe it might behoove us to use ours a little more often, to appreciate it a little more, to realize that it is all that really separates us from… just biochemistry.