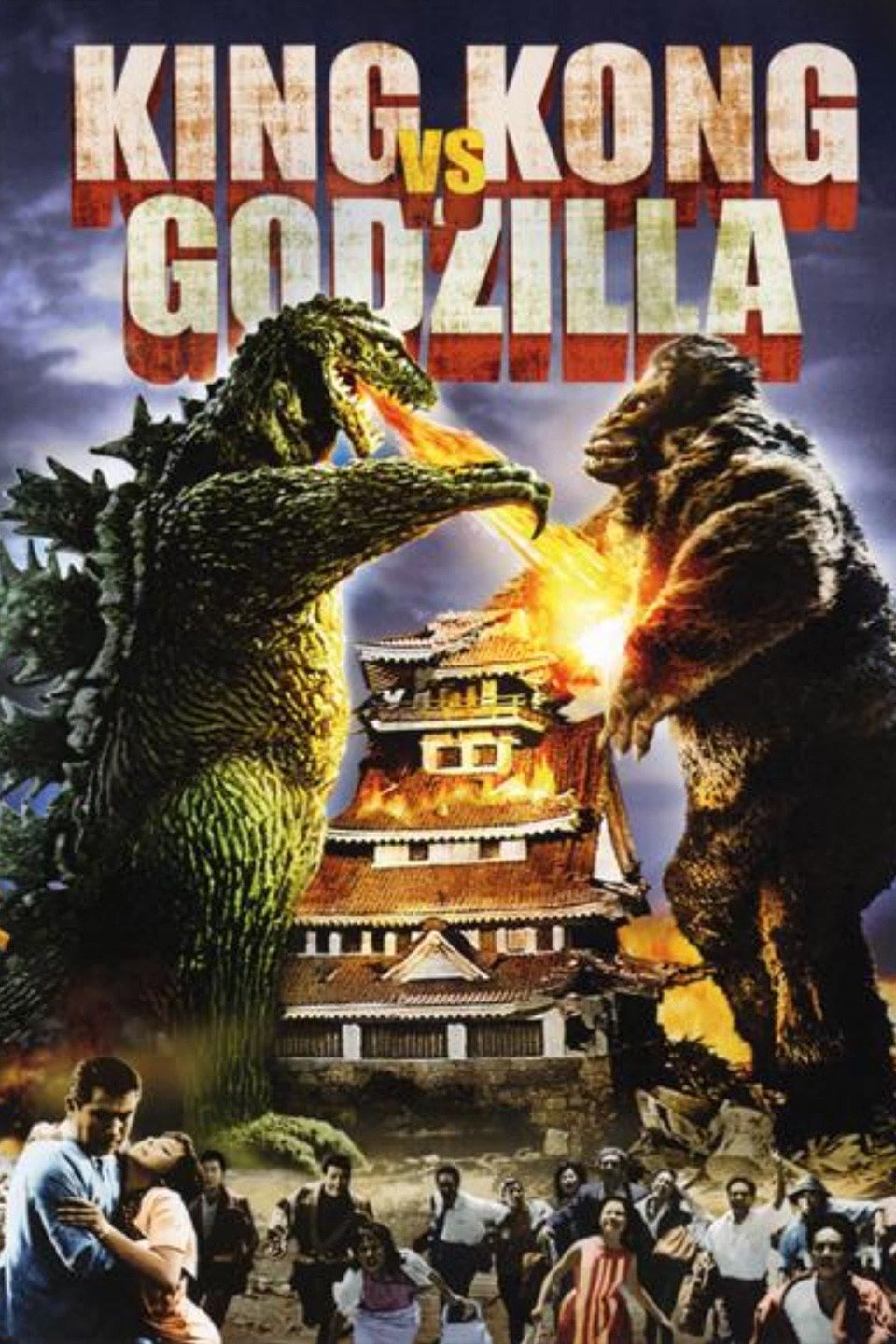

When I was a kid, I have to admit, I dragged my dad to some pretty rotten movies. Anything with a dinosaur, or something that resembled a dinosaur, I had to see. It’s a measure of how bad some of those movies were that 1962’s ‘King Kong versus Godzilla’ was by no means the worst. In fact, I think my dad was kinda looking forward to that one, because he mentioned several times how much he had enjoyed seeing the original ‘King Kong’ when he was a kid.

At one time or another I’ve seen every Godzilla movie, even the really bad 70s ones like ‘Godzilla versus Megalon’, and every Kong movie, including Dino de Laurentiis’s 1976 remake of ‘King Kong’. It’s worth pointing out that de Laurentiis’s Kong cost more than ten times as much as ‘Godzilla versus Megalon’ but stank just as bad.

So as you might guess, the new Warner Brothers release ‘Godzilla versus Kong’ was a must-see for me, Covid-19 or no Covid-19. OK, I’ll be honest: we signed up for HBO Max in order to see it at home. Still, I did get to see it!

Now let’s get one thing straight from the start: nobody goes to see a monster movie because they like good acting or a well-thought-out plot. You go to see huge creatures demolishing entire cities and wiping out whole armies. That used to mean guys in rubber monster suits stepping on toy tanks and swatting toy planes out of the air while smashing miniature buildings, but today it’s all done with CGI. That makes no difference, however; a monster movie is all about monsters being monstrous.

In that respect ‘Godzilla versus Kong’ delivers. In addition to two big fights between the title characters, there are several fights with lesser monsters, all leading up to a big finale where the two good monsters (of course both Kong and Godzilla are really good guys) take on the real bad guy. I’ll leave it at that; no spoilers here.

As in most monster movies, the plot that the human actors are following is just there to set up the big fights, and again ‘Godzilla versus Kong’ is no exception. The two plots (it’s actually difficult to determine which is the main plot and which is the subplot) are both silly and contrived, but they do successfully converge at the climax to bring the main monsters together for the big fight. The actors are not expected to display any real depth of emotion, and for the most part they live up to that expectation.

The effects (and really, a monster movie is all about the effects) are quite good, but honestly I think we’ve reached a point where CGI just isn’t going to get dramatically better. The CGI in ‘Godzilla versus Kong’ is no better or worse than in ‘Avengers: Endgame’. As with any superhero or sci-fi movie, the end credits of ‘Godzilla versus Kong’ list an army of computer artists who produced most of what you see on screen, but I really don’t think that adding any more artists, or any more computing power, is going to significantly improve the product. It looks to me like CGI has hit the point of diminishing returns.

You may have heard that Warner Brothers is hoping to turn their monsters, known in Japan as Kaiju, into a cinematic universe to compete with Marvel’s superhero MCU. This universe began with 2014’s ‘Godzilla’ before going on to 2017’s ‘Kong: Skull Island’ and 2019’s ‘Godzilla: King of the Monsters’, but I don’t know how much further this monsterverse, or would that be Godzillaverse, can go. After three Godzilla and two Kong movies they need some other monster to step up for a change of pace, but let’s be honest, there’s no other monster who can carry a movie by itself. Don’t get me started on Rodan. If either Godzilla or Kong has to appear in every movie, I have to ask: how many more can they do?

At the risk of being presumptuous, I do have a suggestion. If Warner Brothers could obtain the movie rights to the works of H. P. Lovecraft, then they could do a film version of ‘The Call of Cthulhu’. The follow-up to that would then be ‘Godzilla versus Cthulhu’, integrating Lovecraft’s ‘Great Old Ones’ into the world of the Japanese Kaiju. Sounds good to me!

Still, I’ve no doubt that it won’t be long before there’s another Godzilla movie. After all, the big green guy first appeared back in 1954 in the Japanese film ‘Gojira’, and ‘Godzilla versus Kong’ is his 36th feature film. And when there is another Godzilla movie, you can be certain I’ll be there to see it.