There have been several exciting discoveries from ancient sites around the world. So rather than wasting any time, let’s just get to it.

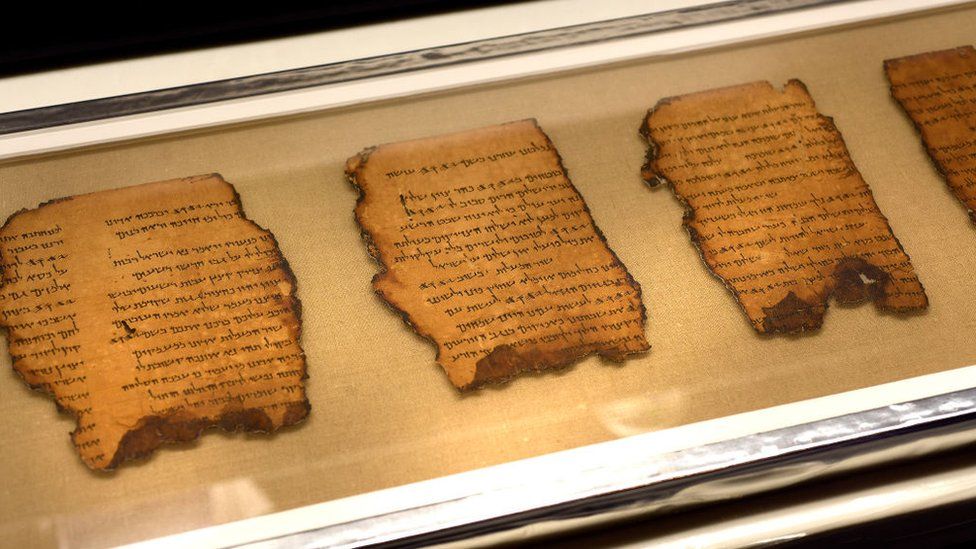

I’ll start today with the discovery that’s gotten the greatest amount of press coverage: the unearthing of new fragments of the Dead Sea Scrolls. Now everybody knows that the Dead Sea Scrolls are fragments of writings from the Jewish Scriptures, the Christian Old Testament, that have been dated from about the second century BCE to the end of the first century CE. The writings were discovered between the years 1947 and 1956 in a series of eleven caves not far from the shoreline of the Dead Sea in the Khirbet Qumran region of Israel. The writings consist entirely of Jewish religious texts, and while most are versions of well-known books from the Old Testament, some are works that were completely unknown before their discovery.

No one knows exactly who wrote the Dead Sea Scrolls or how they came to be hidden away in deserted caves so far from any habitation. One of the two main theories is that a small religious sect, known from the works of the Jewish historian Josephus as the Essenes, wrote the manuscripts at a nearby archaeological site. The problem is that there is no evidence to connect the small settlement to either the scrolls or the Essenes. The other theory is that before the Romans captured the city of Jerusalem and ended a Jewish revolt in 70 CE, a group of Jewish patriots managed to escape with part of the Temple library, which they hid in the caves to keep it from being destroyed by the Romans. Again, the evidence for this theory is only circumstantial.

Ever since the discovery of the writings seventy years ago, archaeologists have explored and studied the caves along the Dead Sea, hoping not only to solve the riddle of who put the scrolls there but also to find more fragments. And in fact the Israel Antiquities Authority has recently announced the discovery of dozens of new fragments that, when pieced together, were found to come from the books of Zechariah and Nahum in the so-called minor prophets of Jewish scripture.

The fragments were found in a cave colourfully named the Cave of Horror. The name comes from the roughly 40 human skeletons that excavators found there in the 1960s, but the cave is also notoriously difficult to reach. At 80 m below the cliff top and at the intersection of two ravines, it is so hard to get to even with modern rappelling equipment that it’s difficult to imagine how Jewish patriots fleeing the Romans ever managed to reach the cave’s entrance.

Although the latest writings are plainly fragments of Jewish scripture, the language they are written in is Greek, showing how important that language was in the Middle East at that time. (In fact, did you know that most quotations from the Old Testament that occur in the New Testament are from a Greek translation of the Jewish scriptures known as the Septuagint?) Also, even though only a few lines of text can be reconstructed, there are noticeable differences in wording between the new fragments and the same lines as printed in your Bible.

As hard as it can be to interpret writings from thousands of years ago, most archaeological sites contain no writing at all, making it far more difficult to understand exactly what happened there. A 6,200-year-old Neolithic site that has been unearthed near the town of Potocani, in what is now the country of Croatia, is a prime example of this.

Discovered in 2007, the site is simply a mass grave. The remains of 41 people, men, women and children aged from about 2 to 50 years old, were crammed into a pit only 2 meters in diameter and 1 meter deep. This was not a quick burial for the victims of some plague, however. Nearly every skull examined by archaeologists from the University of Zagreb showed at least one blunt force wound at the back of the head. Just as telling, there was no sign of defensive wounds anywhere on the arms or body. These people either did not or could not fight back against their attackers. The dead in the pit were all murder victims.

At first the scientists thought that they might have stumbled upon a massacre from the 20th century, such as a Nazi execution from WW2 or ethnic cleansing during the breakup of Yugoslavia. The lack of any metal objects like belt buckles or buttons made that seem unlikely, however. It was only after carbon-14 dating that the archaeologists realized just how long ago the massacre had occurred.

In order to gain some clue as to why these 41 people had been killed, it was decided to carry out DNA analysis of the remains. Surprisingly, it turned out that while the victims were related to a degree, they were not closely related. In other words, they were not all members of the same family. So this atrocity was not a family or clan being wiped out by a rival family or clan. As far as the evidence showed, this was a random sample of people living in the area 6,200 years ago who were murdered for some unknown reason.

We will probably never know the exact motive for these killings, but the researchers speculate that climate change, in the form of a long period of either drought or flooding, may have led to a squabble over limited resources, and that these victims were simply the losers of that conflict. In other words, the events of 6,200 years ago may not have been all that different from the ideological killings of the 20th century.

For my final story, I’d like to mention something less violent and more pleasant. Greek archaeologists working near the ancient city of Olympia, site of the Olympic Games in ancient times, have unearthed a 2,500-year-old bronze idol in the shape of a bull. The small statue was found quite by chance, when an observant archaeologist happened to notice one of the horns sticking out of the ground after a heavy rainfall.

The researchers believe that the figurine was probably an offering to the god Zeus, not only because of the nearby temple to the chief of the gods but also because the bull was a well-known sacred symbol of Zeus. The story of the rape of Europa, in which Zeus disguised himself as a bull, comes to mind.

The figurine has been taken to a laboratory for preservation, as have the fragments of the Dead Sea Scrolls and the remains of the Croatian dead. There they will be studied in the hope that some clue or clues may be discovered to help archaeologists tell us more of the stories of our ancestors.