As I have mentioned on occasion in these posts, I live in the city of Philadelphia, Pennsylvania, in the eastern United States. I consider that to be rather fortunate given the growing problems caused by global warming. It’s true that here in Philly our summers are getting a bit hotter and drier, but the most noticeable change in our weather has been the milder winters, which I’m not going to complain about.

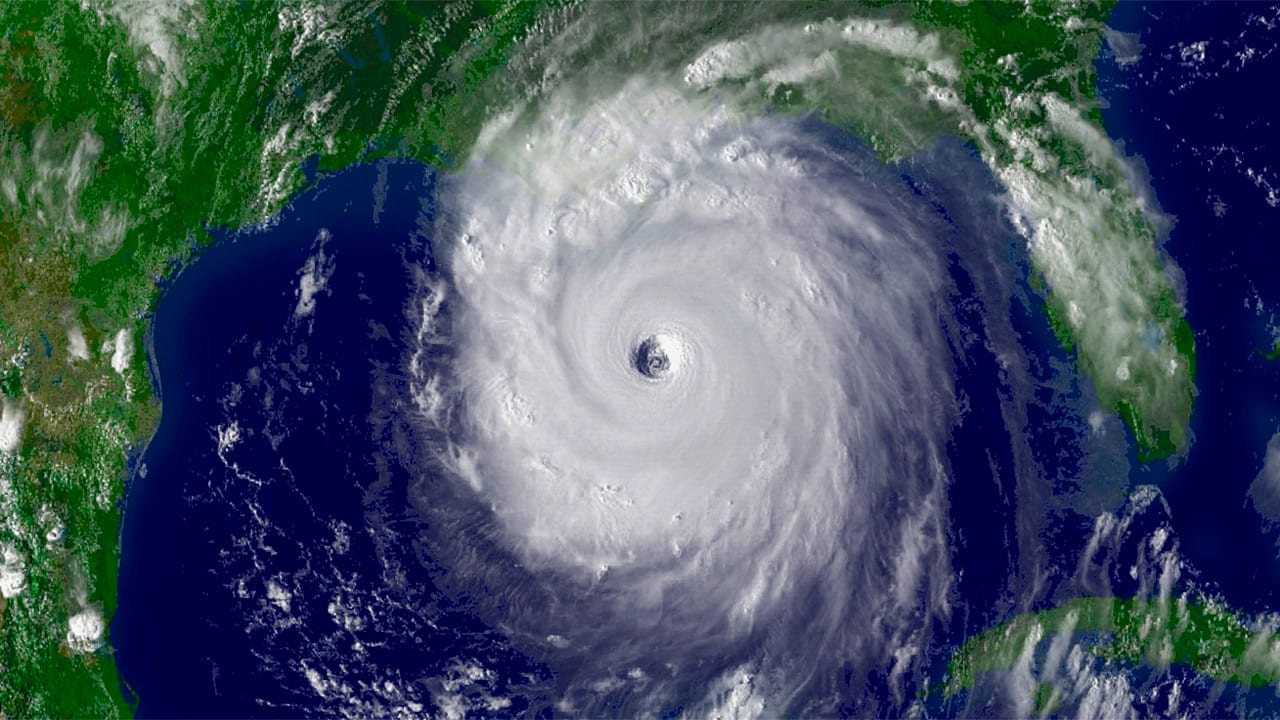

In Philadelphia we don’t have to worry about the increasing threat of hurricanes like the people in Miami or New Orleans do. Nor have we been subjected to the ever greater number of tornadoes that people living in the Great Plains or Deep South have had to endure. And while each of the past few summers has been quite dry (we are in a slight drought right now), it’s nothing like out west, where rivers and reservoirs are at historic lows and water shortages are starting to impact everyday life for millions of people. No, all in all Philadelphia has been lucky. The weather here has hardly been showing the effects of global warming.

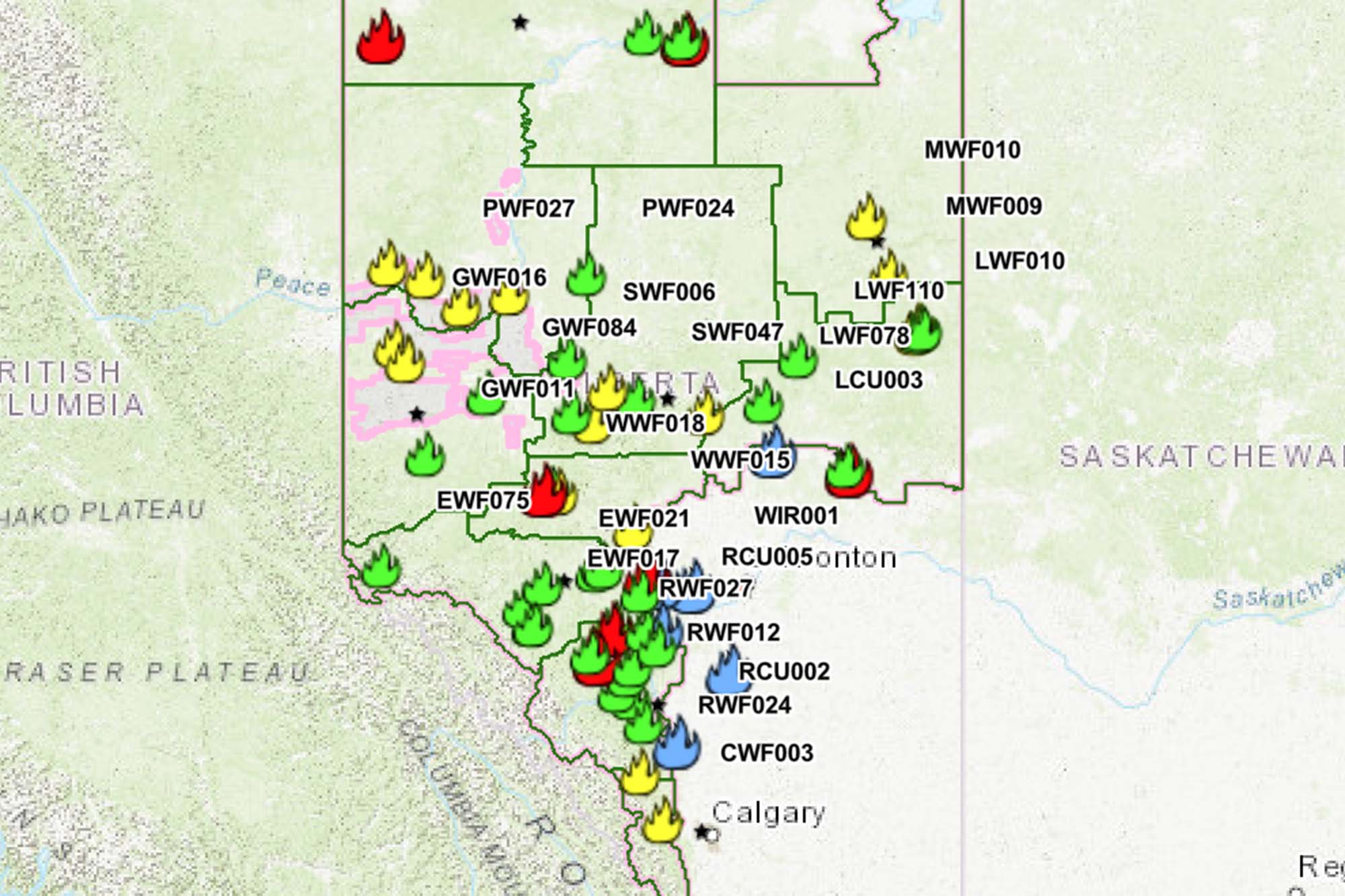

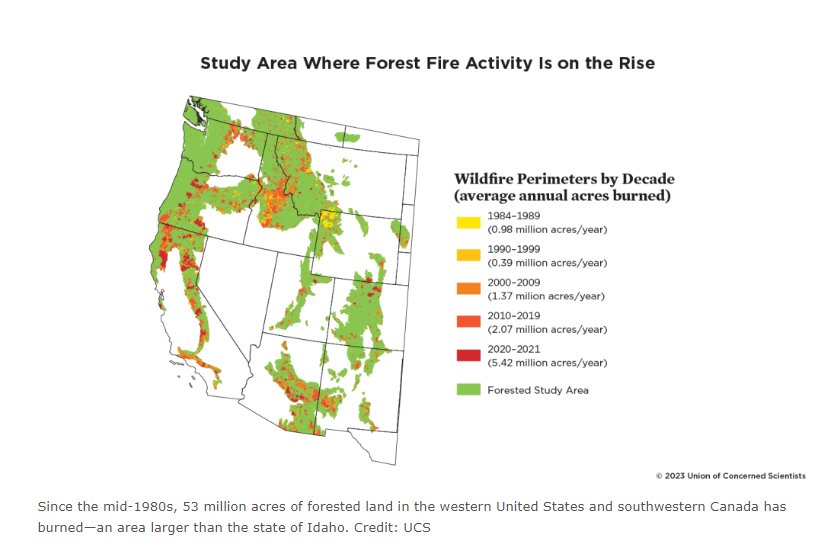

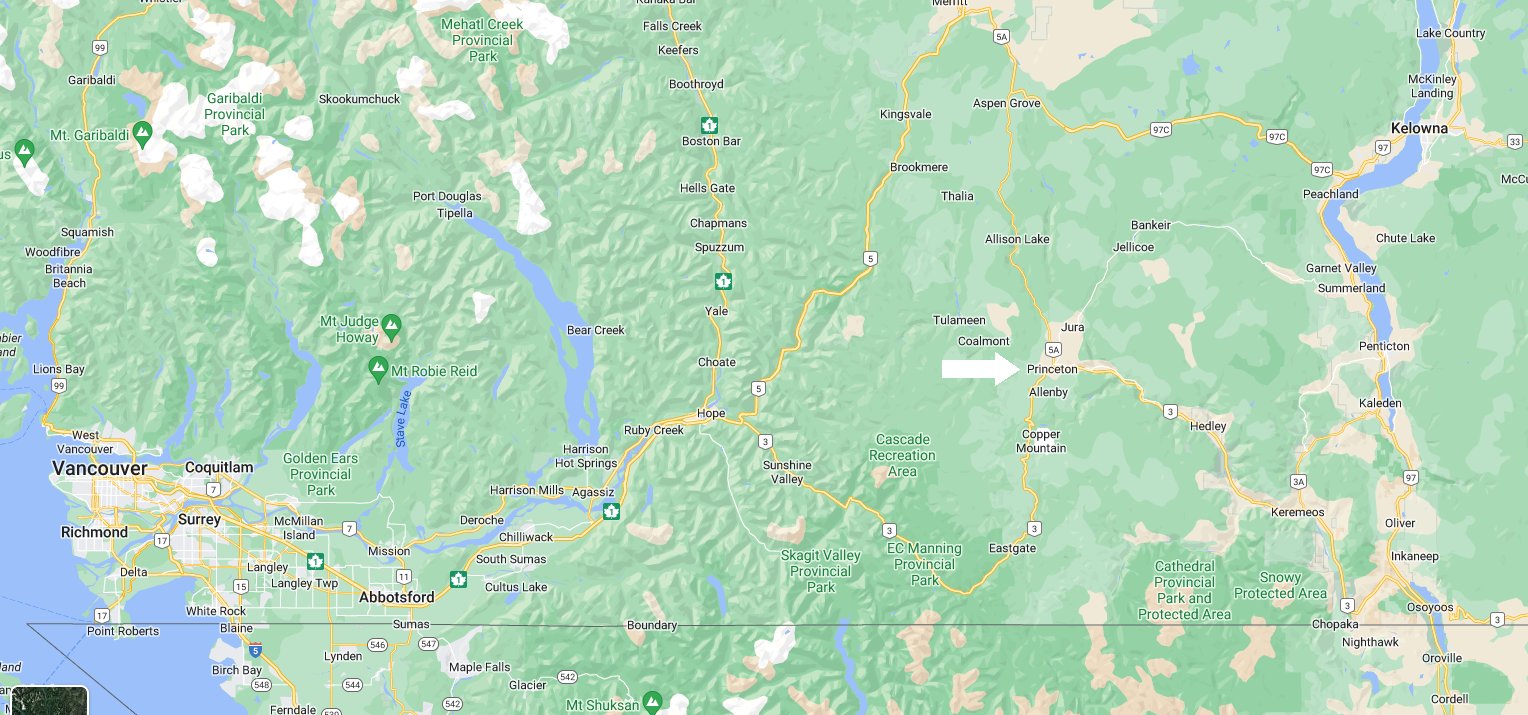

That was until the second week of June this year. It actually started a couple of weeks earlier, when we began hearing about the huge number of wildfires burning in the Canadian provinces of British Columbia and especially Alberta. I even mentioned them in my post of 10 June 2023. Over a million acres of trees were consumed, and the amount of smoke produced was so massive that it traveled for over a thousand kilometers, a small amount even reaching the US east coast and giving Philadelphia a few days of beautiful red sunsets.

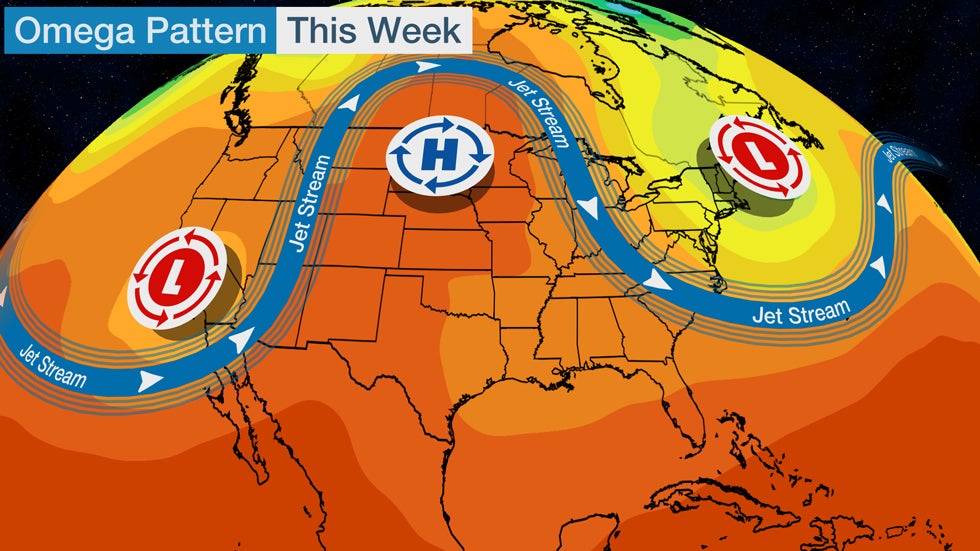

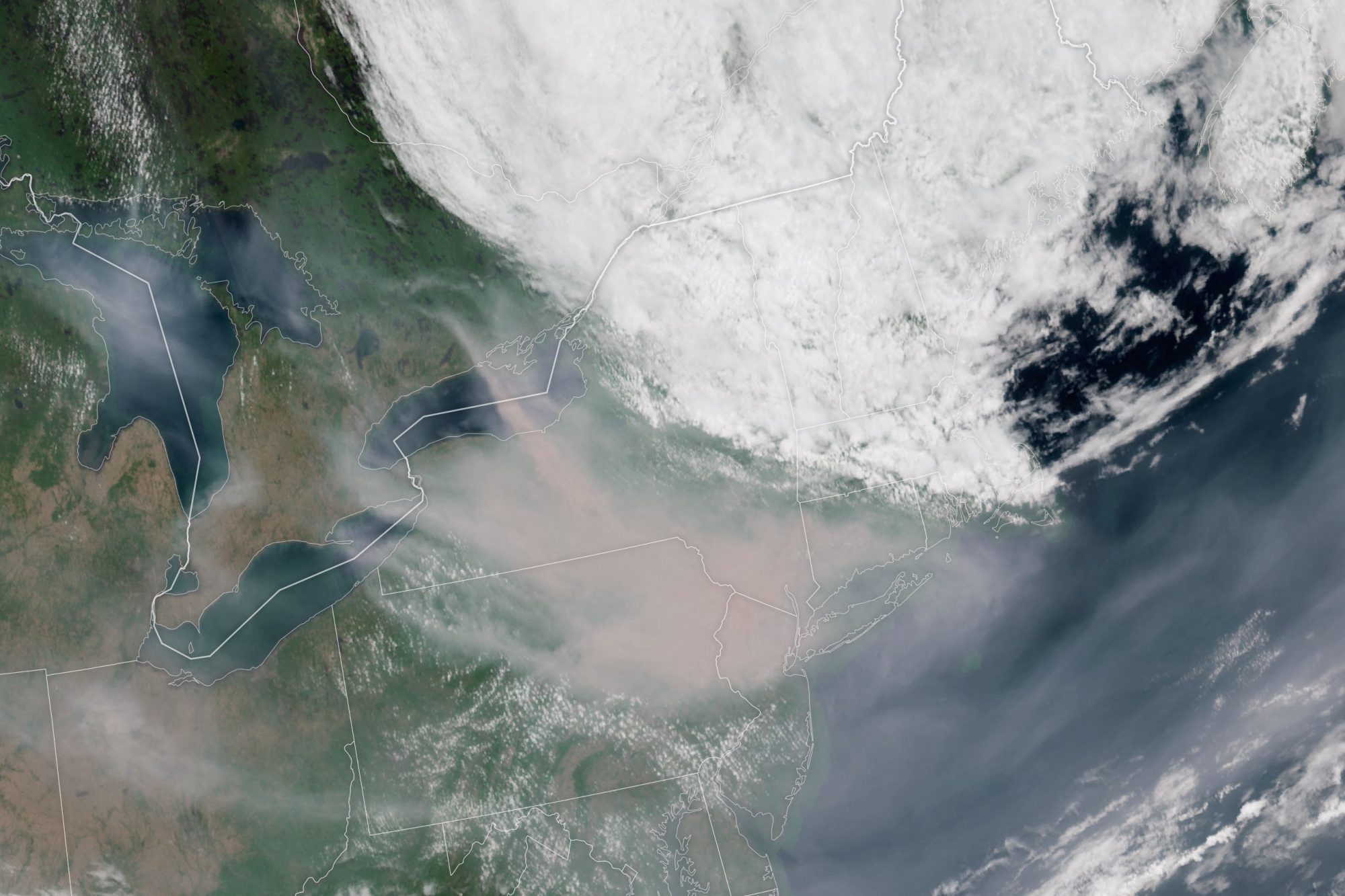

Before the fires out west could begin to quiet down, more wildfires started burning in the northern part of Quebec, as many as 65 separate fires destroying as much as another two million acres of forest. Meanwhile, as the fires burned up north, the Mid-Atlantic region of the US was experiencing an unusual weather condition known as an omega block, so named because the path of the jet stream flowing across the US resembles the Greek letter omega (Ω).

During an omega block two separate low pressure systems set up, one in the northwest region of the US and the other over New England and the maritime provinces of Canada. Between the two low pressure systems a massive high pressure system stretches from Texas all the way up to Minnesota. Although unusual, once set up an omega block can last several weeks or more. This year’s lasted through most of the month of May and into June. Here in the Mid-Atlantic, the counterclockwise flow around the low pressure system to the northeast combined with the clockwise flow around the high pressure system to the west to funnel cool air down from Canada, giving Philadelphia the nicest spring we’ve had in many years.

Until the 6th of June, that is, when the omega block began bringing down the huge amounts of smoke generated by the Canadian wildfires. It was on the evening of the 6th that a distinct smell of smoke could be noticed in the air, and the weather forecasts were predicting that things were going to get worse, much worse, over the next few days.

Even though the night was quite cool and promised to be perfect sleeping weather, we decided to close up our house and turn on the AC so as to keep the smoke outside. The next day the smell was everywhere and Philadelphia got its first air quality alert, code orange. By the evening of the 7th the air was quite thick and everything looked as if it were in a fog, except that the air was very dry.

The worst day of all was Thursday the 8th of June, when the air quality was declared hazardous and everyone in the city was urged to stay indoors. In the early morning hours the 24-hour news channels were declaring that New York City had the third worst air quality of any large city on Earth, but by lunch NYC was officially the worst. Around three P.M. it was Philadelphia’s turn to be the worst in the world, as the air outside turned a dull, rusty orange and visibility dropped below a kilometer.

As the afternoon news came on, the meteorologists, reporters and anchors all stared dumbfounded at the cameras as they described the conditions in the city. In a return to the days of the Covid pandemic, the outside reporters were all wearing masks to protect themselves from breathing in the noxious smoke. Those meteorologists and anchors who had lived in Philadelphia for decades could only repeat, “This kind of thing doesn’t happen here!”

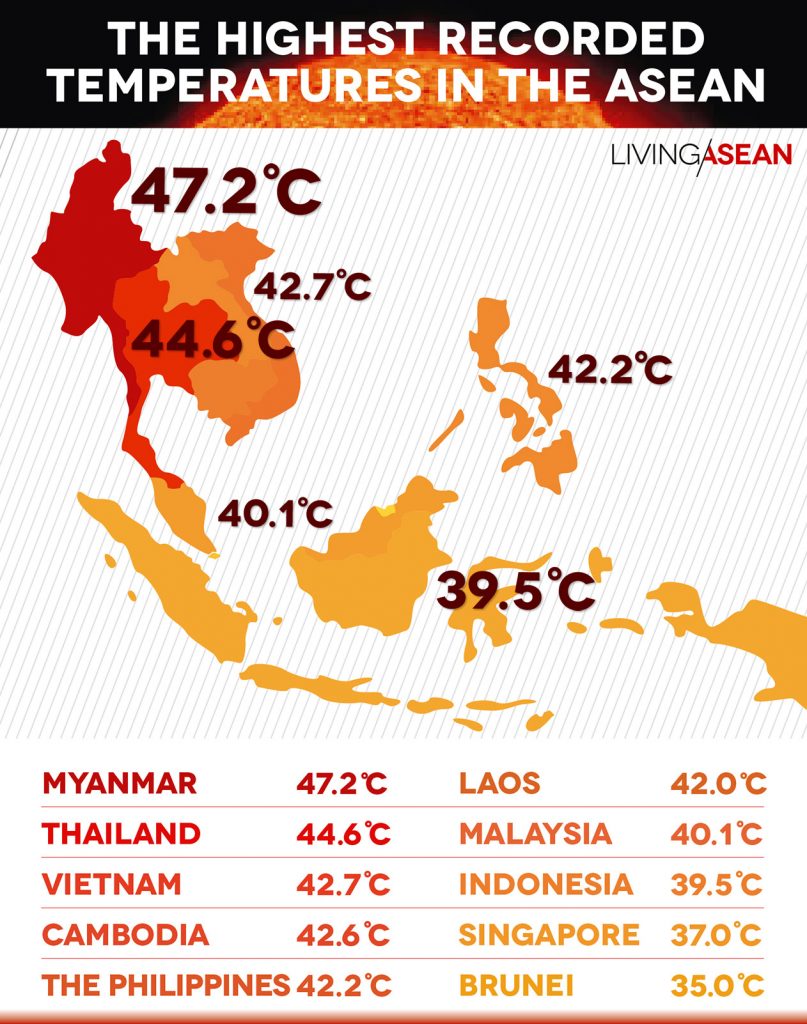

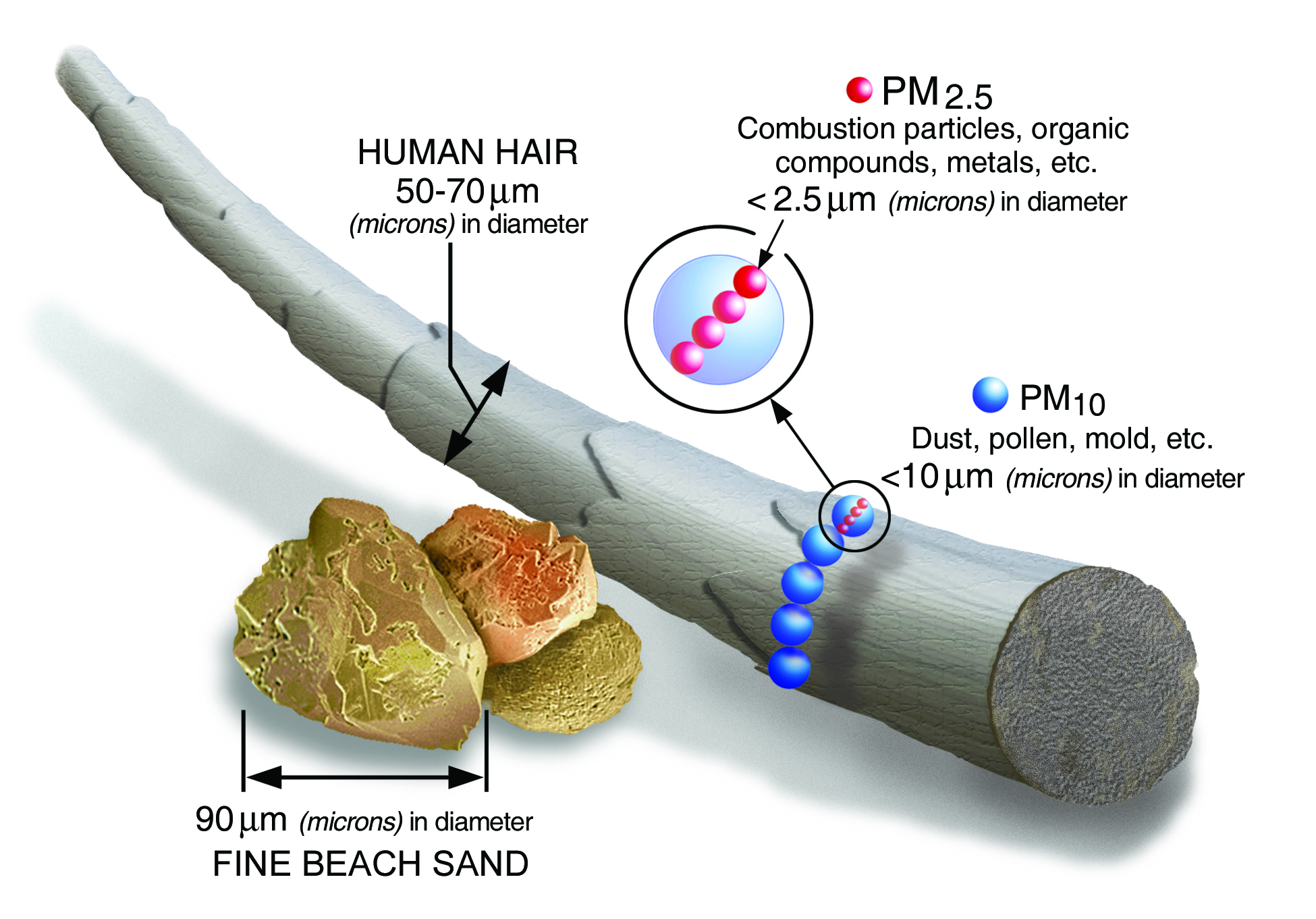

Time for a little science; this is a science blog after all. Solid particles floating in the air are obviously a nuisance and can easily cause breathing problems, especially for anyone who has trouble breathing to begin with. Particulate Matter (PM), as it’s known, is classified by its size because different sized particles behave differently in the air and in our bodies when we breathe them in.

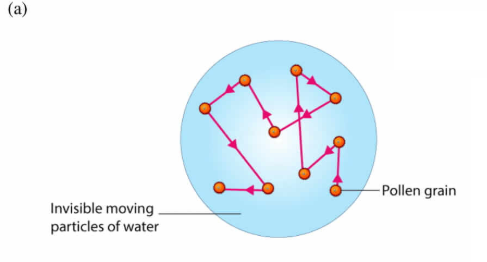

We all know how a strong wind can blow sand particles into the air, and in desert regions of the world sand storms can even be deadly. Particles with a diameter of around 10 micrometers or less, such as fine windblown sand and dust, pollen and mold spores, are designated as PM10. The larger particles in this class are heavy enough that they cannot stay in the air for long without a strong wind, and at around 10 micrometers in size they cannot penetrate deep into our lungs.

Smoke particles on the other hand, like those brought down from the Canadian wildfires, are classified as PM2.5, meaning that they are 2.5 micrometers or smaller in diameter. Such small particles can remain suspended in the air for days or even weeks and can travel on the winds for thousands of kilometers before finally falling to the ground. Even worse, PM2.5 particles are so small that they can get deep into a person’s lungs and remain there. Breathing air heavy in PM2.5 is very much like smoking a cigarette, and the long-term effects on our health are very similar.
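For anyone who likes to see the numbers, here is a rough sketch of why size matters so much for how long a particle stays aloft. It uses Stokes’ law for the settling speed of a small sphere in still air. The constants are my own assumptions and not measurements of actual smoke: spherical particles with the density of water, air at roughly room temperature, and no wind, turbulence or rain, all of which matter a great deal in the real atmosphere. The function names are made up for this illustration.

```python
# Rough Stokes' law estimate of how fast small particles settle in still air.
# All constants below are illustrative assumptions, not measured smoke data.

G = 9.81                   # gravitational acceleration, m/s^2
AIR_VISCOSITY = 1.81e-5    # dynamic viscosity of air near 20 C, Pa*s (assumed)
PARTICLE_DENSITY = 1000.0  # particle density, kg/m^3 (assumed, about that of water)


def settling_velocity(diameter_um, density=PARTICLE_DENSITY):
    """Terminal settling speed in m/s for a sphere of the given diameter (micrometers).

    Uses v = density * g * d^2 / (18 * viscosity), neglecting the density of
    air itself and the slip correction that matters for the tiniest particles.
    """
    d = diameter_um * 1e-6  # convert micrometers to meters
    return density * G * d ** 2 / (18 * AIR_VISCOSITY)


def hours_to_fall(diameter_um, height_m=1000.0):
    """Hours needed to settle through height_m of perfectly still air."""
    return height_m / settling_velocity(diameter_um) / 3600


if __name__ == "__main__":
    for size in (10.0, 2.5):
        v_mm_s = settling_velocity(size) * 1000
        print(f"{size:>4} um: {v_mm_s:.3f} mm/s, "
              f"{hours_to_fall(size):.0f} hours to fall 1 km in still air")
```

Because the settling speed goes as the square of the diameter, a 2.5 micrometer particle falls 16 times more slowly than a 10 micrometer one under these assumptions, which is the basic reason smoke can ride the winds so much farther than dust.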

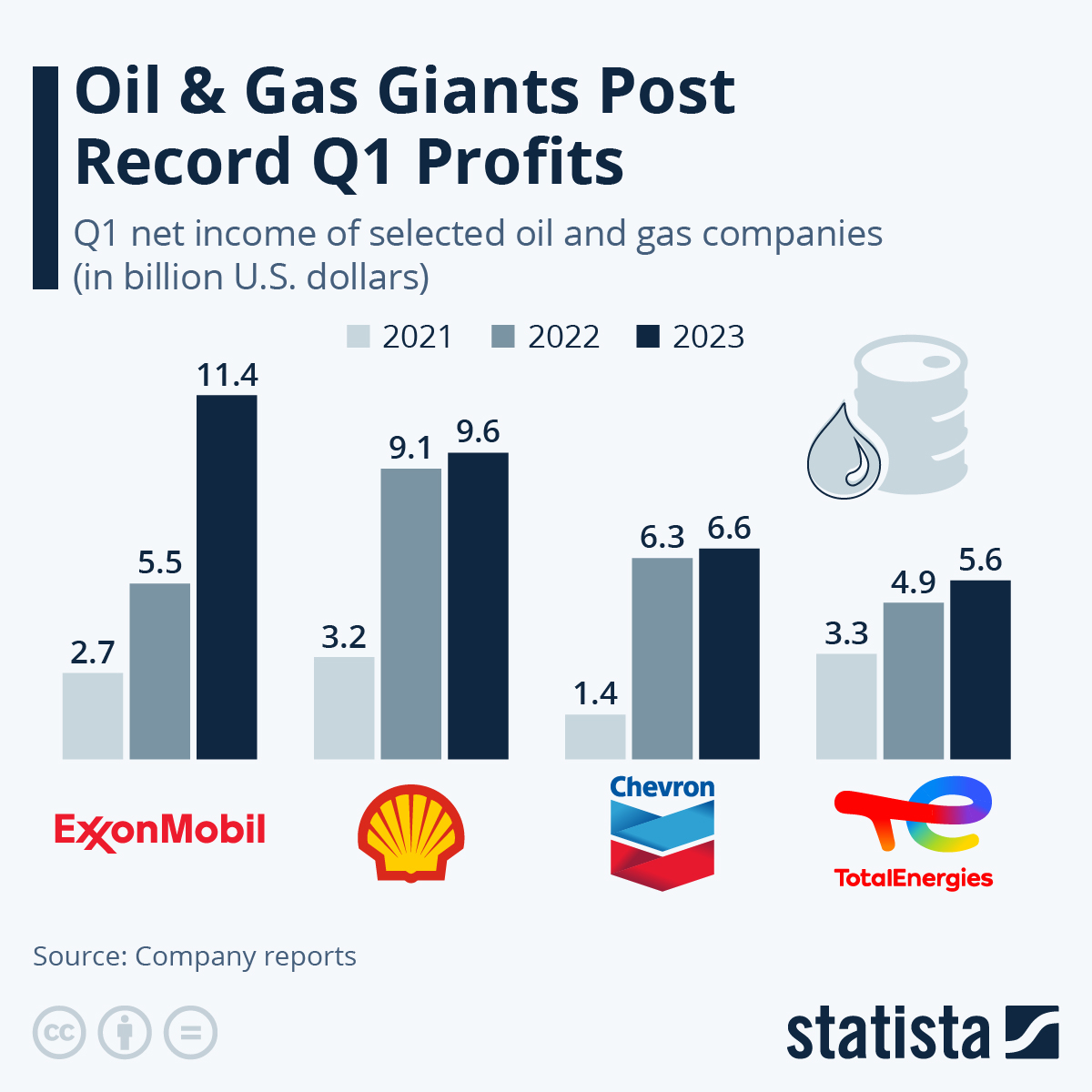

Get used to hearing the phrase PM2.5, because not only is more and more of such pollution being produced by the ever-growing number of wildfires, but the exhaust generated by burning fossil fuels also produces PM2.5. Indeed, as the amount of CO2 in our atmosphere grows so does the amount of PM2.5, and it’s becoming an ever larger problem in cities with a lot of smog days like Delhi and Beijing.

Philadelphia never used to have such smog problems, but the climate is changing and long-term models show that the conditions that caused the smog on June the 8th are likely to recur with increasing frequency. The air quality alerts of June 6-8 are just one more example of how fossil fuels are making every part of our planet a much less healthy place to live.